Pine wilt disease detection in high-resolution UAV images using object-oriented classification

Zhao Sun · Yifu Wang · Lei Pan · Yunhong Xie · Bo Zhang · Ruiting Liang · Yujun Sun

Abstract Pine wilt disease (PWD) is currently one of the main causes of large-scale forest destruction. To control the spread of PWD, it is essential to detect affected pine trees quickly. This study investigated the feasibility of using the object-oriented multi-scale segmentation algorithm to identify trees discolored by PWD. We used an unmanned aerial vehicle (UAV) platform equipped with an RGB digital camera to obtain high spatial resolution images. Multi-scale segmentation was applied to delineate the tree crowns, and object-oriented classification was then used to classify trees discolored by PWD. The optimal segmentation scale was determined using the estimation of scale parameter (ESP2) plug-in. The feature space of the segmentation results was optimized, and appropriate features were selected for classification. The results showed that the optimal scale, shape, and compactness values of the tree crown segmentation algorithm were 56, 0.5, and 0.8, respectively. The producer’s accuracy (PA), user’s accuracy (UA), and F1 score were 0.722, 0.605, and 0.658, respectively. There were no significant classification errors in the final classification results, and the low accuracy was attributed to the low object counts caused by incorrect segmentation. The multi-scale segmentation and object-oriented classification method could accurately identify trees discolored by PWD with straightforward and rapid processing. This study provides a technical method for monitoring the occurrence of PWD and identifying trees discolored by the disease using UAV-based high-resolution images.

Keywords Object-oriented classification · Multi-scale segmentation · UAV images · Pine wilt disease

Introduction

Annually, more than 12.78 million hectares in China are seriously damaged by forest pests (Sun et al. 2021). Pests and diseases are not only the main causes of forest degradation (Montagne-Huck and Brunette 2018), but also cause extensive economic losses (Yemshanov et al. 2009) from the loss of forest assets, the discounted cost of the treatment, removal, and replacement of damaged trees (Kovacs et al. 2011; Chang et al. 2012), and the losses related to forest recreation, landscape value (Bigsby et al. 2014), and carbon sequestration (Kim et al. 2018).

Pine wilt disease (PWD), caused by the pinewood nematode (PWN), is the most destructive forest disease in global forest ecosystems. The nematode can be rapidly transmitted to other trees by other species such as Monochamus alternatus. The PWN is native to North America but is currently found in the United States, Canada, Japan, Korea, China, Portugal, and Spain (Kwon et al. 2011; Lee and Kim 2013). PWD has become a common tree disease in North America, but it does not cause extensive damage to forests in this area due to the long-term coevolution between the host and the PWN (Firmino et al. 2017). However, in other regions, PWD causes widespread death of pine trees and huge economic losses. Once a tree is infected, it only takes about 40 d from the onset of symptoms to the death of the tree, and an entire pine forest can be destroyed in only 3–5 years (Zhao 2008). The needles undergo a rapid color change from green to russet or reddish-brown before they wither and die (Nguyen et al. 2017), and these changes can be monitored by remote sensing (Tetila et al. 2020).

For preventing and controlling PWD, damaged trees have been monitored in the field (Wulder et al. 2006), using satellite remote sensing (Kim et al. 2018), and using unmanned aerial vehicle (UAV) remote sensing (Kumar et al. 2012; Li et al. 2012; Modica et al. 2020). Although remote sensing methods have advantages over field surveys, certain limitations still remain, including clouds, weather, and regional conditions. Although an increasing number of satellite images are currently available, the resolution of most of them is not high enough for accurate monitoring; only a few expensive satellite sensors provide images with the necessary submeter resolution. In recent years, UAV technology has significantly improved regarding the remote control distance, battery capacity, and image quality, providing an alternative to traditional aerial image acquisition with low resolution and high cost. UAVs typically fly below the clouds at altitudes as low as tens of meters. With the development of better technology and high-resolution, high-performance digital cameras, UAVs can now obtain high-resolution images of small areas. The maximum resolution is generally about 0.02 m, providing fine-scale feature information for reliably interpreting surface conditions. Therefore, UAVs are well suited for the high-precision mapping needs of forestry departments. UAV remote sensing has been widely used for forest resource assessments, monitoring of forest fires, forest diseases, and insect pests, and forest information extraction (Guillen-Climent et al. 2012; Zhang and Kovacs 2012). UAV remote sensing for monitoring diseased trees not only saves material and human resources, but also has advantages over traditional airborne remote sensing for identifying single diseased trees.

Among numerous studies on UAV-based identification of diseased trees around the world, Näsi et al. (2015) developed a high-resolution hyperspectral imaging approach using a novel hyperspectral imaging sensor on a UAV to collect images of areas infested with spruce bark beetle; it provided an overall classification accuracy of 90% for healthy and diseased trees. Onishi and Ise (2018) used a commercial UAV to obtain aerial images of forests and performed image segmentation to delineate individual tree crowns using an open-source deep learning framework. They also developed a machine vision system for automatic tree classification with an accuracy of 89% for seven tree species, thus providing a cost-effective tree classification tool for forest researchers and managers. Wyniawskyj et al. (2019) used satellite images and an image segmentation algorithm based on deep learning for automatic pixel-level classification of a Guatemalan forest. Natesan et al. (2019) used residual neural networks in a new method for UAV-based tree species classification. The artificial neural network was trained with UAV images collected over 3 years. In two sets of experiments, the classification accuracy for two tree species was 80% and 51%, respectively.

Although the use of deep learning for UAV remote sensing imagery analysis for tree species classification and diseased tree monitoring has become mainstream (Dash et al. 2017), developing a target detection model based on deep learning requires large amounts of data and a long training period. Different models have various advantages regarding the recognition accuracy or detection speed, but no model has performed well in both aspects. A target monitoring model is very complex and difficult to use for single monitoring tasks in forest areas. Although the monitoring methods reported so far have achieved good recognition accuracy, the techniques are complex and require expensive hardware. In addition, the range covered by a UAV in one flight is limited, and there are limitations regarding large-area monitoring and multiple flights in real-world scenarios.

The use of traditional image segmentation algorithms to extract the crowns of individual trees is now widespread. Nevalainen et al. (2017) used random forest and multi-layer perceptron algorithms to obtain accuracies of 40–95% for identifying individual trees in the test image. Modica et al. (2020) proposed a quick and reliable semi-automatic workflow to process multispectral UAV images to detect and extract olive and bergamot tree crowns to obtain vigor maps for precision agriculture applications, with classification F-scores from 0.85 to 0.91 for olive and bergamot. Jing et al. (2012) improved a multi-scale image segmentation method by first determining the size of the canopy area and filtering the gray-scale image with a Gaussian filter, then using a watershed algorithm to segment the canopy to obtain a high-quality map of the canopy. Lee et al. (2019) used a UAV to collect high-resolution images in PWD-affected areas and used an artificial neural network (ANN) and support vector machine (SVM) to monitor dead and withered pine trees affected by PWD. Also using a UAV and GPS data to collect images, Kim et al. (2017) created orthophotos of 423 pine trees suspected of having PWD in six areas, which improved the monitoring efficiency, and found that the PWD infection was not involved. Compared with traditional classification, and based on a solid theoretical foundation, object-oriented classification has the advantages of low hardware requirements and rapid processing, which has contributed to the wide use of the object-oriented multi-scale segmentation algorithm in image processing (Jasiewicz et al. 2018; Xie et al. 2019; Di Gennaro et al. 2020; García-Murillo et al. 2020).

In light of these studies, here we applied a multi-scale segmentation algorithm to extract individual tree crowns and classify the discolored trees affected by PWD using object-oriented classification. This approach represents a rapid identification method for PWD-infected trees based on UAV RGB images, laying a foundation for fast and low-cost monitoring of forests for diseases and insect pests.

Materials and methods

Study site

This site in the collective forest area of Dayu County, Jiangxi Province (25°19′ N, 114°08′ E) has a typical subtropical humid monsoon climate with abundant rainfall and four distinct seasons. The annual average temperature is 19.6 °C (maximum 41.2 °C, minimum −10 °C), and annual average precipitation is 1500 mm. The forest area is mainly coniferous forest composed of Cunninghamia lanceolata and Pinus massoniana. Trees of P. massoniana in the forest area were infected by PWN. After the attack, the trees died, and the PWNs spread rapidly in this area.

UAV flight and photogrammetric data acquisition

The DJI Phantom 4 Pro UAV was used to acquire images of the study area and generate photogrammetric products as input data for the segmentation of single tree crowns. The UAV weighs only 1391 g and has a maximum takeoff altitude of 6000 m, a flight time of 28 min, and a top cruise speed of 50–72 km/h. Thanks to the built-in global navigation satellite system (GNSS) receiver, the take-off and landing are completely automatic. After setting the mission variables (mission area, flight height, overlap, etc.), the waypoints were transmitted to the drone, which automatically executed the task.

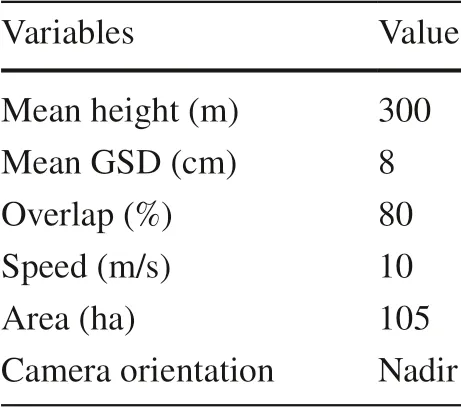

For the RGB flight, the UAV was equipped with a FOV 84° 8.8 mm/24 mm lens, with a 1-inch CMOS sensor of 20 MP (5472 × 3648), and a sensor size of 12.8 × 9.6 mm. The flight was planned using the DJI GO4 app, considering a photogrammetric overlap between images of 80% in the lateral and longitudinal directions, an altitude of 300 m, a speed of 10 m/s, and an average ground sample distance (GSD) of about 8 cm. Table 1 shows the characteristics of the photogrammetric flights. The data acquisition is a key step of the photogrammetric process since the quality of the final result depends on it.
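The stated GSD of about 8 cm can be checked from the flight altitude and camera geometry given above. The following is a minimal sketch using the usual nadir-view relation (GSD = altitude × pixel pitch / focal length); the function name is hypothetical, and flat terrain is assumed.

```python
def ground_sample_distance(altitude_m, focal_length_mm, sensor_width_mm, image_width_px):
    """Approximate ground sample distance (m/pixel) for a nadir image over flat terrain."""
    # Physical size of one pixel on the sensor, in millimetres.
    pixel_pitch_mm = sensor_width_mm / image_width_px
    # Similar triangles: ground size / altitude = pixel size / focal length.
    return altitude_m * pixel_pitch_mm / focal_length_mm


# Values taken from the flight description: 300 m altitude, 8.8 mm lens,
# 12.8 mm sensor width, 5472 pixels across.
gsd_m = ground_sample_distance(300, 8.8, 12.8, 5472)
print(f"GSD = {gsd_m * 100:.1f} cm/pixel")
```

With these inputs the result is close to 8 cm per pixel, in line with the value reported for the flight.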

Table 1 UAV flight variables

Flights were carried out at midday in August 2019, when there was sufficient light, low wind, and minimal shadow for optimum UAV image quality.

Photogrammetric data processing

The aerial image acquisitions were aimed at producing the RGB orthomosaics. All the UAV data were post-processed using the structure from motion (SfM) approach (Turner et al. 2012). The algorithms, which are now implemented in several commercial software packages, allowed us to rapidly and accurately align the images, compute a three-dimensional dense point cloud, and then reconstruct a textured mesh of the object of study.

We processed images using Pix4D (2016) mapper software (v4.4.12, Pix4D, Prilly, Switzerland) to produce an ortho-image by mosaicking images from the drone and correcting for the topographic and camera distortions. After the individual overlapping UAV images had been mosaicked into a digital orthophoto map (DOM) of the study area, the diseased area that forms the scope of this study was clipped out (Fig. 1).

Fig. 1 The study area in Dayu County

Multi-scale segmentation

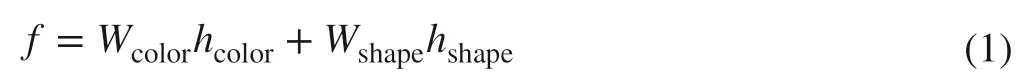

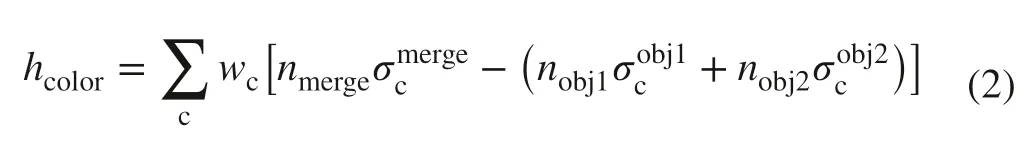

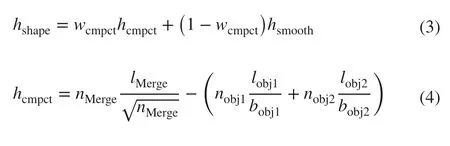

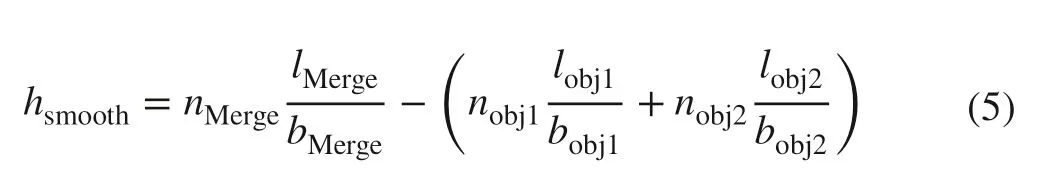

After the image preprocessing, we adopted the widely used multiresolution segmentation (MRS) algorithm (Baatz and Schäpe 2000), implemented in the eCognition software (v9.0, Trimble Germany GmbH, Munich, Germany), to produce semantically meaningful image objects. The method is well suited for high-resolution remote sensing images. This algorithm is based on a bottom-up region-merging method and is mainly controlled by three key parameters: scale parameter, shape, and compactness. Multi-scale segmentation produces image objects with similar attributes and arbitrary scales. In general, a remote sensing image contains multiple features. The traditional pixel-based method considers only the spectral features of single pixels, thus restricting the use of classification features and producing “salt and pepper” effects in the results (Robson et al. 2015; Guo et al. 2021). In contrast, multi-scale segmentation generates meaningful objects at any scale with maximum homogeneity and minimum heterogeneity. Multi-scale segmentation is a bottom-up hierarchical merging process. It uses a pixel as the central point, merges adjacent pixels or smaller segments, and generates a homogeneous object by aggregating the small segments into larger objects (Fu et al. 2019). The heterogeneity of each merged object must be smaller than a given threshold. Each merged object has maximum homogeneity between the pixels within an object and minimum heterogeneity between objects. The homogeneity (f) of an object is defined as

$$f = W_{color}\, h_{color} + W_{shape}\, h_{shape}$$

where $W_{color}$ is the spectral information weight, $h_{color}$ is the spectral homogeneity value, $W_{shape}$ is the shape information weight, and $h_{shape}$ is the shape homogeneity value.

The spectral homogeneity value $h_{color}$ is determined by the standard deviation of different bands.

where $n$ represents the number of pixels and $\sigma_c$ represents the standard deviation of pixels within the element.
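For reference, in the formulation of Baatz and Schäpe (2000) the spectral criterion for merging two objects is commonly written as shown here. The band weights $w_c$ and the labels merge, obj1, and obj2 (the merged object and its two constituents) come from that formulation and are assumptions rather than symbols defined in this text.

$$h_{color} = \sum_{c} w_c \left( n_{merge}\,\sigma_c^{merge} - \left( n_{obj1}\,\sigma_c^{obj1} + n_{obj2}\,\sigma_c^{obj2} \right) \right)$$

The expression is the increase in size-weighted standard deviation, summed over bands $c$, that the merge would cause.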

The shape homogeneity value $h_{shape}$ is composed of smoothness $h_{smooth}$ and tightness (compactness) $h_{cmpct}$.

where $l$ represents the perimeter of the object polygon, $n$ represents the pixel number of the object, and $b$ represents the minimum side length of the polygon with the same area in the object.
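In the same formulation (Baatz and Schäpe 2000), the shape terms for a single object are usually given in the form below. The compactness weight $W_{cmpct}$ is an assumed symbol that does not appear in this text, and in the merging step each term is evaluated as a size-weighted difference between the merged object and its two constituents, analogous to the spectral term.

$$h_{shape} = W_{cmpct}\, h_{cmpct} + \left(1 - W_{cmpct}\right) h_{smooth}$$

$$h_{smooth} = \frac{l}{b}, \qquad h_{cmpct} = \frac{l}{\sqrt{n}}$$

Under this reading, the shape and compactness parameters set in eCognition correspond to $W_{shape}$ and $W_{cmpct}$, with $W_{color} = 1 - W_{shape}$.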

In the MRS process, the scale, shape, and compactness parameters have to be determined using multiple tests. The scale parameter is an abstract term that, in eCognition Developer software, determines the maximum allowed heterogeneity for the resulting image objects and thus the size of the image objects, the segmentation quality, and the accuracy of information extraction (Karydas 2019). The larger the scale parameter, the larger the segmented objects and the smaller the number of patches, and vice versa (Carleer et al. 2004; Happ et al. 2010; Stefanski et al. 2013). The criteria to evaluate the segmentation scale are the maximum internal homogeneity of the segmented objects and the maximum heterogeneity between the objects. In addition, the larger the segmentation scale, the smaller the computational complexity.
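The effect of the scale parameter can be illustrated with a toy example. The sketch below is not the eCognition implementation: it is a simplified, single-band, one-dimensional greedy region merger that uses only a spectral criterion and assumes that a merge is allowed while its cost stays below the squared scale parameter. All names and the sample signal are hypothetical.

```python
import numpy as np


def merge_cost(a, b):
    """Increase in size-weighted standard deviation if segments a and b are merged."""
    merged = np.concatenate([a, b])
    return len(merged) * merged.std() - (len(a) * a.std() + len(b) * b.std())


def segment_1d(values, scale):
    """Greedy bottom-up merging of adjacent segments of a 1-D signal."""
    # Start with one segment per pixel.
    segments = [np.array([v], dtype=float) for v in values]
    while len(segments) > 1:
        # Cost of merging each pair of neighbouring segments.
        costs = [merge_cost(segments[i], segments[i + 1])
                 for i in range(len(segments) - 1)]
        best = int(np.argmin(costs))
        # Stop when even the cheapest merge exceeds the allowed heterogeneity.
        if costs[best] >= scale ** 2:
            break
        segments[best:best + 2] = [np.concatenate([segments[best], segments[best + 1]])]
    return segments


# Hypothetical signal with three visually distinct plateaus.
signal = [10, 11, 10, 12, 40, 41, 39, 42, 80, 82, 81, 79]
for scale in (1, 3, 10):
    sizes = [len(s) for s in segment_1d(signal, scale)]
    print(f"scale={scale}: {len(sizes)} segments, sizes {sizes}")
```

Because the order of merges does not depend on the threshold, a larger scale can only continue the same merging sequence further, which yields fewer and larger segments.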

Determination of optimal segmentation scale

Scale is a dimensionless and ambiguous concept in eCognition Developer software. Among the three parameters (scale, shape, and compactness), the scale parameter has the largest range and the greatest influence on the segmentation results. Different scale settings will lead to very different segmentation results, affecting the final classification results and potentially reducing the accuracy of object extraction.

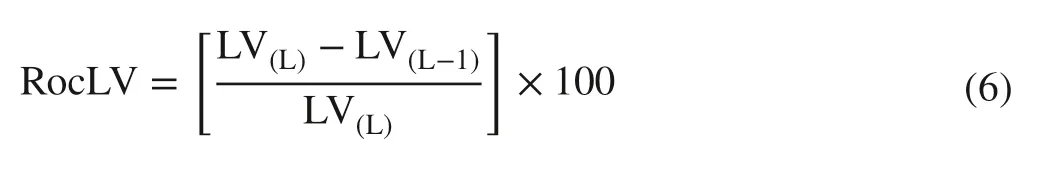

The optimal scale parameter is commonly determined using the estimation of scale parameter (ESP2) plugin in the eCognition Developer software. This tool was designed by Drăguţ et al. (2010) as a plugin to calculate the optimal scale parameter for segmentation. However, a qualitative evaluation of the segmentation scale is required. The ESP tool calculates the local variance (LV) of all the image objects to evaluate the segmentation results. The rate of change of the LV (Roc-LV) is used to evaluate the optimal segmentation scale of a given object. The maximum value of the LV produces a peak, and the optimal segmentation scale corresponds to the peak of a given ground object. Different objects have different optimal scales. Since an image contains multiple objects, multiple peaks are obtained, resulting in multiple optimal segmentation scales depending on the object of interest. The Roc-LV is calculated as follows:

$$\text{Roc-LV} = \frac{LV(L) - LV(L-1)}{LV(L-1)} \times 100$$

where $LV(L)$ is the local variance of the objects at the target layer $L$, and $LV(L-1)$ is the local variance of the objects at the next lower layer $L-1$.

The starting scale was 10, and the increment was 1. A bottom-up iterative method was used, and the process was repeated for 100 loops to obtain the optimal segmentation scale. The calculation result of the ESP tool was output as a text file to generate a line graph. The shape and compactness parameters were set to 0.5.
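The Roc-LV curve and its candidate peaks can be derived from a table of scale and LV values such as the one exported by the ESP tool. The following is a minimal sketch under that assumption; the function names and the LV values are hypothetical, and a peak is taken to be any point that is higher than both of its neighbours.

```python
import numpy as np


def roc_lv(lv):
    """Rate of change of local variance (%) between consecutive scale levels."""
    lv = np.asarray(lv, dtype=float)
    return (lv[1:] - lv[:-1]) / lv[:-1] * 100.0


def local_peaks(scales, roc):
    """Scales at which the Roc-LV is higher than at both neighbouring scales."""
    return [scales[i] for i in range(1, len(roc) - 1)
            if roc[i] > roc[i - 1] and roc[i] > roc[i + 1]]


# Hypothetical LV values for scales 10..15 (start 10, increment 1).
scales = list(range(10, 16))
lv_example = [20.0, 22.0, 23.0, 26.0, 27.0, 27.5]

roc = roc_lv(lv_example)            # one value per scale from 11 onward
candidates = local_peaks(scales[1:], roc)
print(candidates)
```

Each candidate scale still has to be checked visually against the tree crowns, since a peak only indicates that some class of ground object is well matched at that scale.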

Object-oriented classification

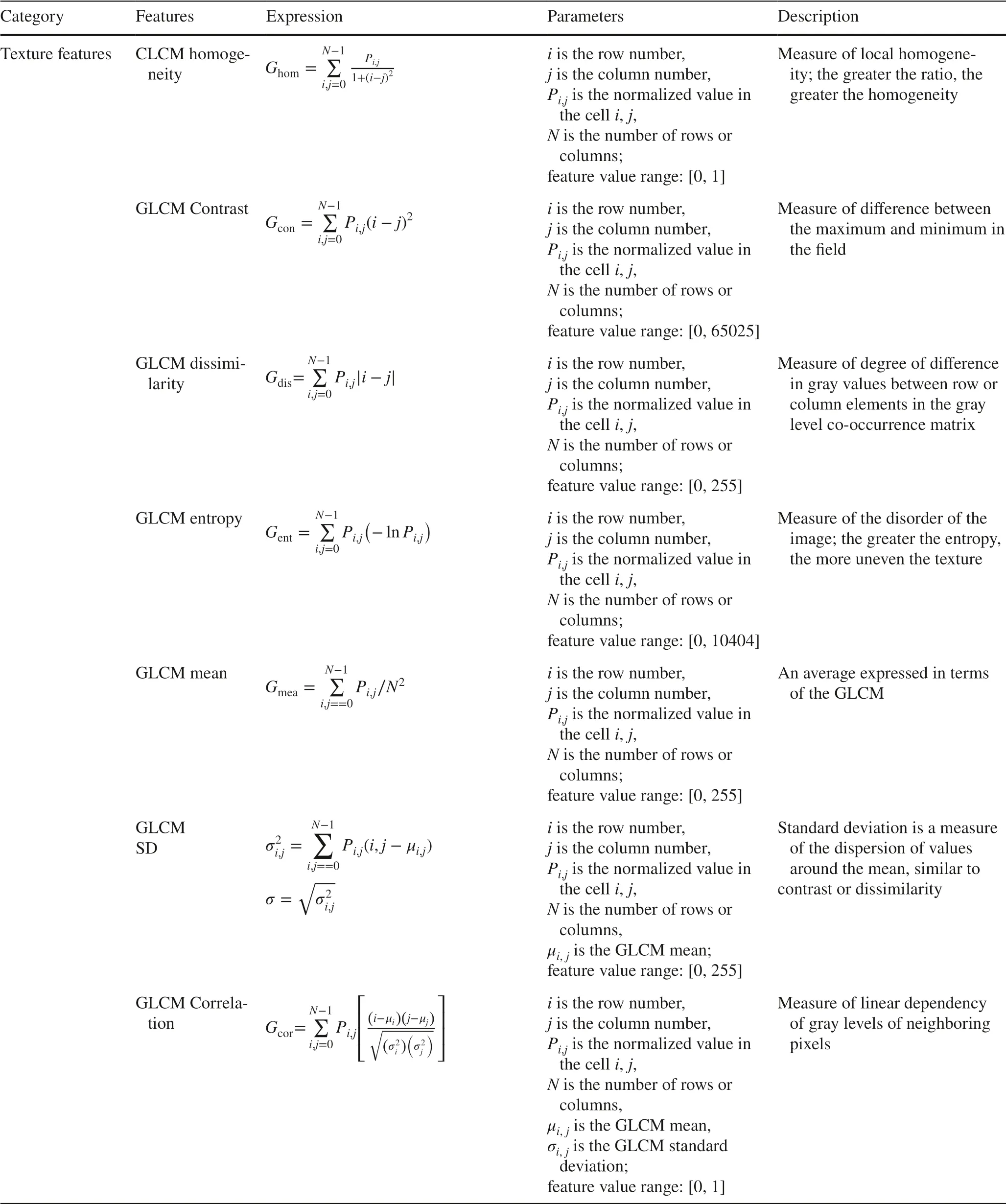

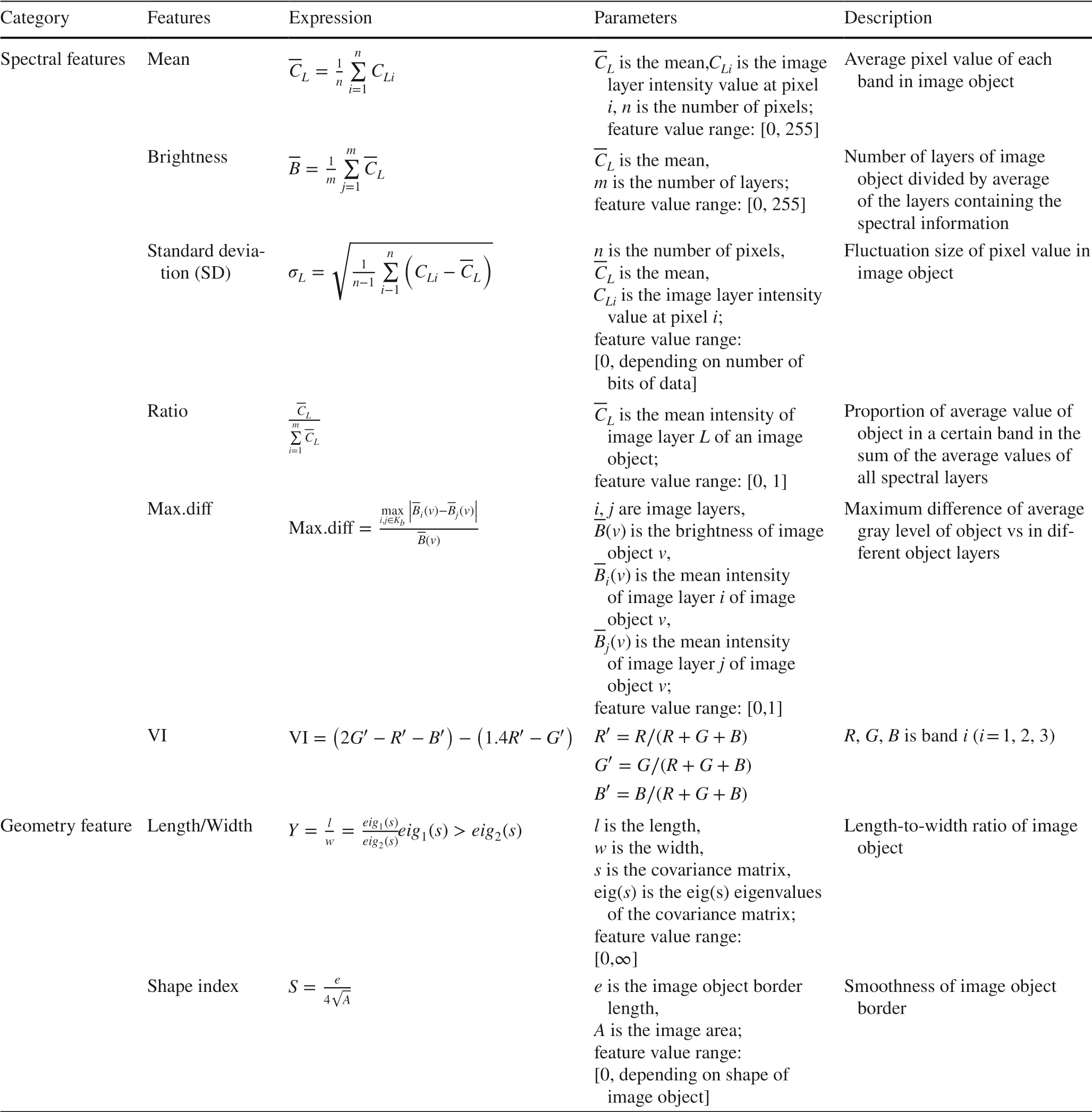

After the segmentation, the image objects were clustered into different categories, and the next step was object classification. Object-based image analysis (OBIA) was then introduced into the sample pattern classification method. OBIA is a methodological framework that aims to extract readily usable objects from images while combining image processing and geographic information system (GIS) functionalities for an integrative utilization of spectral and contextual information (Blaschke 2010). Segments in OBIA are generated by the criteria of homogeneity in one or more dimensions (of a feature space), and then additional spectral information (e.g., mean, minimum, maximum, and median values per band) together with spatial information (e.g., image texture, contextual information, and geometric features) can be assigned to objects (Hay and Castilla 2008). Since there were no buildings, rivers, or lakes in the study area, the images were divided into three categories: tree crown, trees discolored by PWD, and forest gaps. Spectral, texture, and geometry features were selected in the feature space for classification (Table 2).

Table 2 Characteristics of the 21 features used for classification

Accuracy analysis

Accuracy was evaluated in terms of correspondence between the reference crowns and the segmented ones. The evaluation methodology mainly includes the producer’s and user’s accuracy and F1 score. Particularly, the producer’s accuracy (PA) and the user’s accuracy (UA) are calculated using the following equations:

$$PA = \frac{N}{RC'}, \qquad UA = \frac{N}{DC'}$$

where PA is the producer’s accuracy, UA is the user’s accuracy, $N$ is the number of correctly classified crowns, $RC'$ is the number of reference crowns, and $DC'$ is the number of defined crowns. The relationship between UA and PA is described by the F1 score, from the following equation:

$$F1 = \frac{2 \times PA \times UA}{PA + UA}$$

The situation shown in Fig. 2a was considered as a correct segmentation of the crown, while the relationships of reference and segmented crowns in Fig. 2b–d were considered as incorrect segmentation. The segmented crowns were counted according to visual evaluation; every incorrect segmentation was counted as a misclassification. The omission and commission errors can describe the quality of the segmentation more precisely. As illustrated by Ke and Quackenbush (2011), we took into consideration four possible cases of the relationship between the reference data set and the segmented one: (1) complete, (2) simple omission, (3) omission through under-segmentation, and (4) commission through over-segmentation.
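The accuracy measures defined above are simple ratios and can be computed directly from the object counts. The sketch below is illustrative only: the function names are hypothetical, and the reference and defined crown counts of 36 and 43 are assumptions chosen because, together with the 26 correctly extracted discolored trees reported in this study, they reproduce the published PA, UA, and F1 values.

```python
def producer_accuracy(n_correct, n_reference):
    """PA: share of reference crowns that were correctly identified."""
    return n_correct / n_reference


def user_accuracy(n_correct, n_defined):
    """UA: share of defined (segmented and classified) crowns that are correct."""
    return n_correct / n_defined


def f1_score(pa, ua):
    """Harmonic mean of the producer's and user's accuracy."""
    return 2 * pa * ua / (pa + ua)


# 26 correct objects is reported in the study; 36 and 43 are assumed counts.
pa = producer_accuracy(26, 36)
ua = user_accuracy(26, 43)
print(round(pa, 3), round(ua, 3), round(f1_score(pa, ua), 3))
```

Because the F1 score is a harmonic mean, it is pulled toward the lower of the two accuracies, so over-segmentation that inflates the number of defined crowns lowers both the UA and the F1 score.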

Fig. 2 Possible cases of the relationship between reference crowns (red border) and segmented crowns (blue border). a Complete. b Simple omission. c Omission through under-segmentation. d Commission through over-segmentation

Results

Optimal segmentation scale

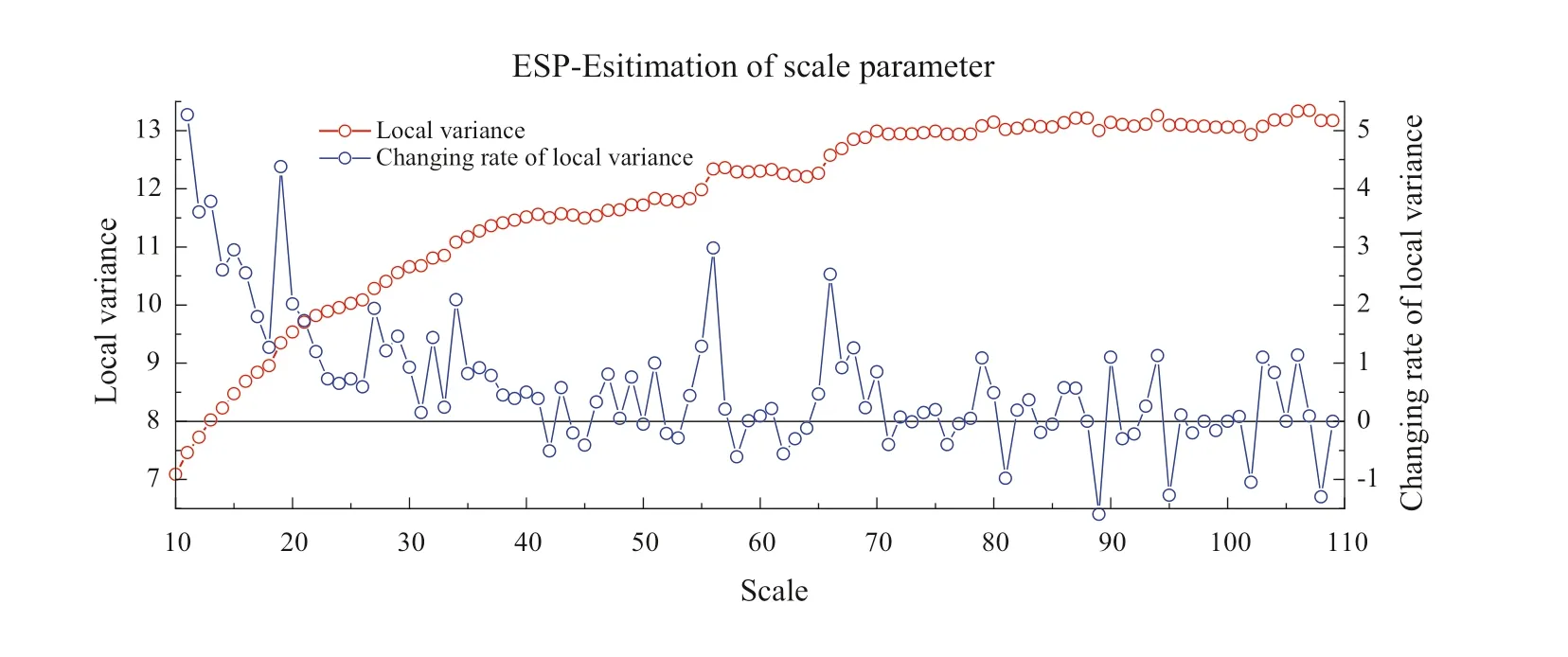

The optimal segmentation scale results obtained from the ESP2 tool are shown in Fig. 3. The x-axis shows the scale parameter, the y-axis shows the local variance (LV), and the z-axis shows the rate of change of the local variance (Roc-LV). A peak of the Roc-LV indicates that the corresponding scale parameter is the optimum segmentation scale of a certain ground object.

Fig. 3 Line chart of the optimal segmentation scale

The peak values of the Roc-LV occurred at scales of 19, 27, 34, 56, 66, and 79. These scales are candidates for the optimal segmentation scale for the tree canopy objects in this image. We then segmented the image at each of these scales obtained from the ESP2 tool, with the shape and compactness parameters set to 0.5. The optimal scale is different for different objects. In this case, we selected the optimal canopy segmentation scale by visual interpretation.

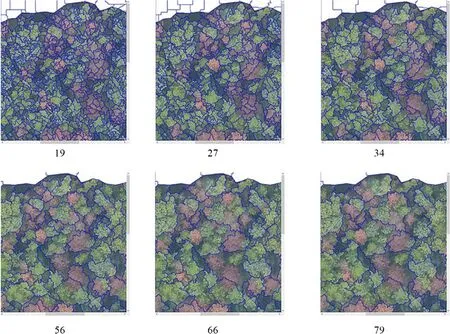

As shown in Fig. 4, the tree canopy is over-segmented when the scale is 19, 27, and 34. At a scale of 56, the tree canopy is well delineated, and at scales of 66 and 79, the canopy of the large trees is not accurately segmented. Therefore, the optimal segmentation scale for the tree canopy is 56 when the shape and compactness parameters are 0.5.

Fig. 4 Comparison of tree crowns at different segmentation scales. The candidate optimum segmentation scales are 19, 27, 34, 56, 66, and 79

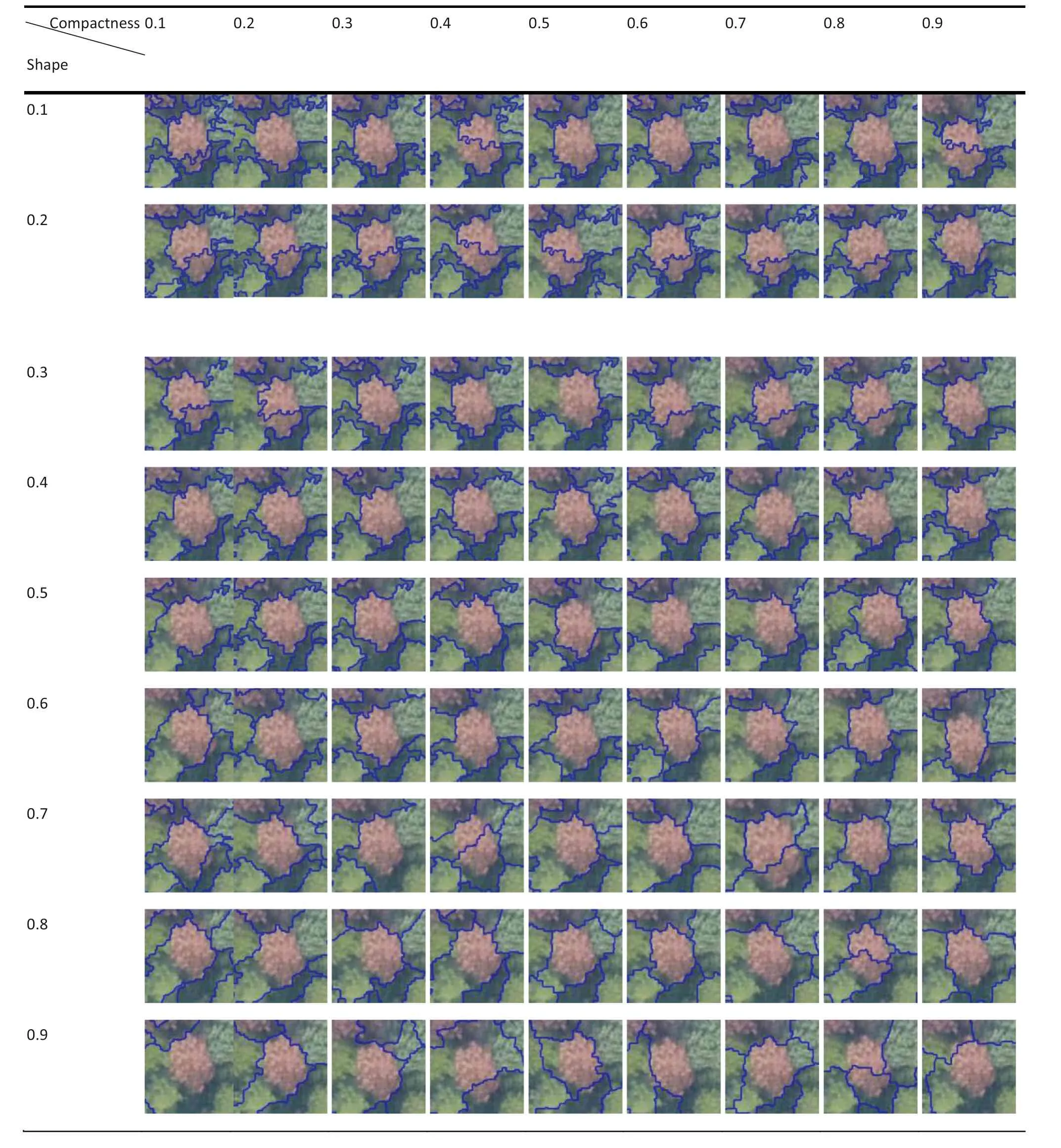

Determination of shape and compactness

Because some groups of adjacent tree crowns were still merged into a single object at a segmentation scale of 56, we tested different shape and compactness values at this scale. The range of the shape and compactness was 0.1–0.9, and the interval was 0.1. One parameter was held constant while the other was adjusted. The optimal parameter combination was determined by evaluating the contrast and segmentation performance visually. As shown in Table 3, the optimal segmentation results were obtained when the scale was 56, shape was 0.5, and compactness was 0.8.
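The one-at-a-time search described above can be enumerated as follows. This is a minimal sketch: the function name is hypothetical, and the value of 0.5 used for the parameter that is held constant is an assumption based on the initial shape and compactness settings.

```python
def one_at_a_time(values, fixed=0.5):
    """(shape, compactness) pairs in which one parameter is fixed and the other varies."""
    vary_compactness = [(fixed, c) for c in values]
    vary_shape = [(s, fixed) for s in values]
    # The pair (fixed, fixed) occurs in both lists, so duplicates are removed.
    return sorted(set(vary_compactness + vary_shape))


# Candidate values 0.1, 0.2, ..., 0.9 (rounded to avoid floating-point noise).
values = [round(0.1 * i, 1) for i in range(1, 10)]
combos = one_at_a_time(values)
print(len(combos), "combinations to segment and inspect at scale 56")
```

This design requires 17 segmentations instead of the 81 needed for a full grid, at the cost of not exploring interactions between the two parameters.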

Feature space optimization and final results

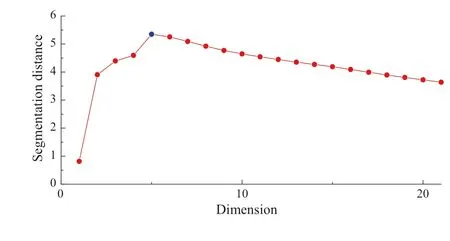

We collected training samples for the three classes (tree crown, trees discolored by PWD, and forest gaps). Generally, the number of samples should be 1/5 to 1/3 of the number of image objects in the class. The classification accuracy typically increases with the number of image features. However, too many features result in redundancy. The feature space optimization tool can find the optimum feature combination. We selected the aforementioned 21 features for optimization in the feature space optimization tool. The number of selected features is the maximum dimension.

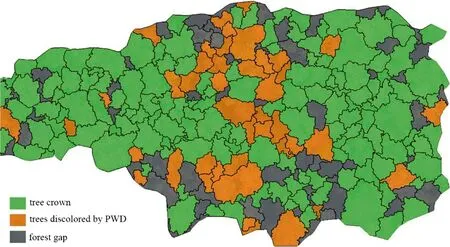

Figure 5 shows that the separation distance reaches the maximum at five features, and the optimal features include ratio G, ratio B, ratio R, max. diff, and VI. We used these features and classified the tree crown, trees discolored by PWD, and forest gaps using the training samples. The result is shown in Fig. 6.
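To make the selected spectral features more concrete, the sketch below computes band ratios and the maximum difference for one image object. The definitions are assumptions based on how these features are commonly defined in object-based image analysis (band ratio = mean of the band divided by the sum of all band means; max. diff = largest difference between band means divided by the brightness). VI is omitted because its formula is not given here, and the function name and pixel values are hypothetical.

```python
import numpy as np


def object_spectral_features(pixels):
    """Band ratios and max. diff for one object given an (n, 3) array of R, G, B values."""
    means = np.asarray(pixels, dtype=float).mean(axis=0)
    total = means.sum()
    brightness = means.mean()  # assumed: unweighted mean of the band means
    return {
        "ratio_R": means[0] / total,
        "ratio_G": means[1] / total,
        "ratio_B": means[2] / total,
        "max_diff": (means.max() - means.min()) / brightness,
    }


# Hypothetical pixel values for a green crown and a reddish-brown (discolored) crown.
healthy = object_spectral_features([[60, 120, 40], [80, 140, 60]])
discolored = object_spectral_features([[150, 90, 60], [170, 110, 80]])
print(healthy["ratio_R"], discolored["ratio_R"])
```

In this example the red ratio is clearly higher for the discolored object than for the green one, which is the kind of contrast that purely spectral features can exploit.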

Fig. 6 Identification results for trees discolored by pine wilt disease (PWD) using the training samples and the features shown in Fig. 5

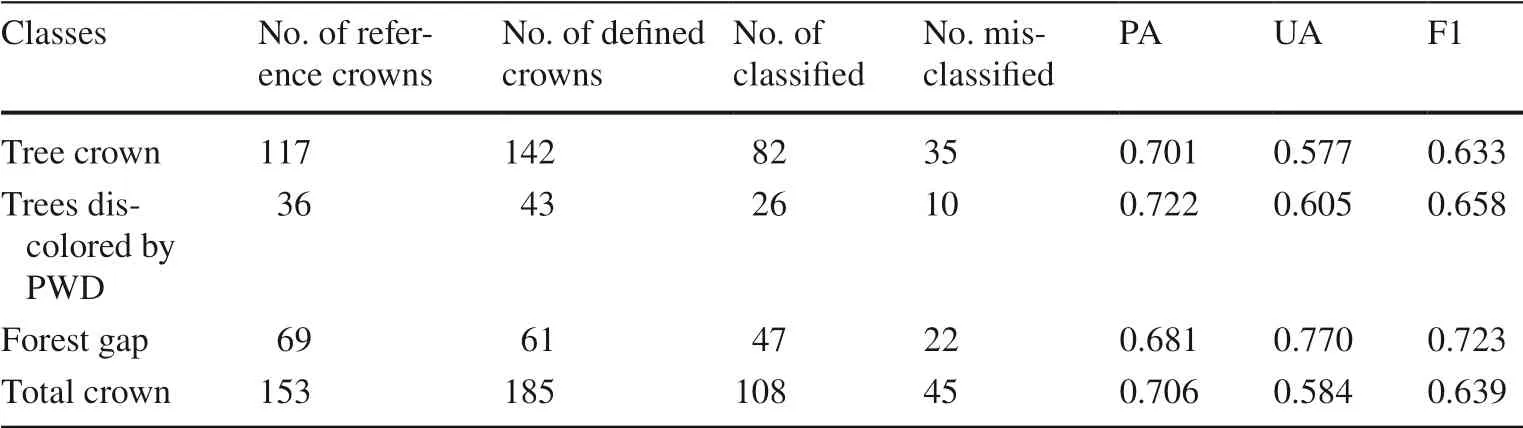

After the classification, each classified object was counted according to the previous counting method. The accuracy results are listed in Table 4. The PA, UA, and F1 values of the tree crown class were 0.706, 0.584, and 0.639, respectively. The PAs of the tree crown and trees discolored by PWD classes were about 0.70, and that of the forest gap class was close to 0.70. The UAs of the tree crown and trees discolored by PWD classes were 0.577 and 0.605, respectively, and that of the forest gap class was 0.770. The F1 values for the three classes were 0.633, 0.658, and 0.723, respectively, which were acceptable.

Table 3 Segmentation results for different combinations of shape and compactness

Table 4 Results of visual evaluation

Discussion

Identifying trees discolored by PWD is a crucial aspect of monitoring forests for PWD and is usually carried out over relatively large areas. Rapid monitoring and detection to reduce the impact of diseases on the forest are the goals of forest pest monitoring. UAV images have sufficient resolution for extracting individual tree crowns (Grznárová et al. 2019). In this study, image segmentation and object-oriented classification were used to identify individual trees discolored by PWD. The proposed method meets the needs of rapid forest monitoring.

The total number of segmented crowns was larger than the number of reference crowns, which might be due to the high image resolution and the tree crown details. Overlapping tree crowns were divided into separate trees, but some flaws were observed in the visual evaluation. The determination of the segmentation scale is highly subjective. The relatively low accuracy was attributed to omissions caused by under-segmentation or over-segmentation, which are incorrect segmentations. Similarly, the number of forest gap objects was also less than the number of reference gaps; some forest gaps were small and included tree branches and were thus identified as canopy areas. The F1 score of the trees discolored by PWD was 0.658, which was similar to the PA and UA obtained by Ke and Quackenbush (2011), but lower than the accuracy of single crown extraction reported by Mohan et al. (2017) and Qiu et al. (2020), who used a canopy height model (CHM) and very high-resolution (VHR) images. The optimal features obtained from the feature space optimization tool in this study were all spectral features, indicating that the color of the tree affected by PWD is an important feature in the classification. The geometry and texture features have a very small effect on the final classification result, perhaps because the discoloration of the trees from PWD has a relatively small effect on the geometry and texture in the image or because the RGB image contains insufficient information; this point needs further study. The final classification requires fewer features, so the amount of calculation required is small and the image processing is faster, which are definite advantages of PWD monitoring using RGB images. After feature space optimization, certain samples are selected and classified. Based on the sample pattern classification method, the classification effect is good. Objects that were correctly segmented (Fig. 2a) were, for the most part, correctly classified after training with these samples. However, due to the influence of the segmentation results, over-segmented and under-segmented objects cannot be well classified. Incorrect segmentation results in low values for the accuracy estimators F1, UA, and PA. In eCognition software, incorrectly classified objects can be assigned to the correct categories by manual classification to improve the classification accuracy. However, since the automation of forest monitoring is the goal, manual editing to improve the classification accuracy has little practical value; thus, we did not use this method in this study.

Fig. 5 Plot of the separation distance and dimension

Object-oriented multi-scale segmentation meets the needs of forest monitoring and has been widely used for satellite remote sensing classification and regional tree species classification in forestry (Xie et al. 2019). No image processing algorithms exist for extracting individual tree crowns from UAV RGB images, and it is necessary to examine whether high-resolution images are suitable for the extraction of individual tree crowns. It is difficult to extract structural information from RGB images, such as the tree height and the diameter at breast height (DBH). Forest site conditions are complex and diverse, and the slope, canopy density, mixed species, and shadow influence image quality. Since this study focused on the application of the method, the influences of these factors were not considered here. However, more in-depth research is needed to ensure that the method has practical applicability.

As mentioned above, there are limitations to evaluating the segmentation quality visually. More comprehensive consideration is needed to assess the accuracy of individual crown delineation. For example, the circumference of the segmented crown may be larger than the actual circumference, or the same crown may be over-segmented. However, the object types can be accurately classified in an over-segmented image. The evaluation of the segmentation results requires a more appropriate method rather than simply performing statistical analysis.

Conclusions

In the present study, we introduced an object-oriented multi-scale segmentation method, based on UAV images collected in the forest area in Dayu County, Jiangxi Province, to extract the tree canopies and identify the trees infected by PWD. Feature space optimization was used to determine the optimal feature combination for classification. The optimum segmentation results were obtained when the scale, shape, and compactness values were 56, 0.5, and 0.8, respectively. Finally, 26 trees discolored by PWD were accurately extracted (PA: 0.722, UA: 0.605, F1: 0.658). There were no significant classification errors in the results, and the low accuracy was attributed to the low object counts caused by incorrect segmentation. This study demonstrated that trees discolored by PWD could be identified in the UAV RGB images using multi-scale segmentation and object-oriented classification. This method undoubtedly can improve the efficiency of PWD monitoring and support conservation efforts in forests in southern China.

Funding The work was supported by the National Technology Extension Fund of Forestry ([2019]06) and the National Natural Science Foundation of China (No. 31870620).

Declarations

Conflict of interest The authors declared that there is no conflict of interest.

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Journal of Forestry Research, 2022, Issue 4
