Towards the Design of Ethics Aware Systems for the Internet of Things

Sahil Sholla, Roohie Naaz Mir, Mohammad Ahsan Chishti

Computer Science and Engineering Department, National Institute of Technology Srinagar, Hazratbal 190006, India

Abstract: The Internet of Things promises numerous societal benefits by providing a spectrum of user applications. However, the ethical ramifications of adopting such a pervasive technology on a society-wide scale have not been adequately considered. Smart things endowed with artificial intelligence may make decisions that entail ethical consequences, yet it is commonly assumed that the functioning of a smart device involves no ethical responsibility vis-a-vis its application context. Such a perspective may precipitate situations that endanger essential human values or cause physical or emotional harm. It is therefore necessary to consider the design of ethics within intelligent systems to safeguard human interests. To address these concerns, we propose a novel method based on Boolean algebra that enables a machine to exhibit varying ethical behaviour by employing the concepts of ethics categories and ethics modes. Such enhancement of smart things offers a way to design ethically compliant smart devices and paves the way for human-friendly technology ecosystems.

Keywords: Boolean; ethics aware; ethical design; Internet of Things

I. INTRODUCTION

The Internet of Things (IoT) is a recent networking paradigm in which, apart from computers, smartphones and other high-end devices, even ordinary things are connected to the global Internet. This has been made possible by recent advances in miniaturization and communication technologies. Ordinary things like keys, furniture, buildings, home appliances, vehicles and numerous other devices are embedded with communication and processing capabilities to realise the vision of IoT. The idea is to achieve ubiquitous connectivity of devices that are able to sense their environment and upload the sensed data to a cloud, where it is processed to provide novel user applications. The IoT vision offers numerous promising applications that could transform the way we interact with the physical world. Application domains include smart transportation, smart grid, smart home, smart healthcare etc. In view of its strategic importance, IoT has rapidly become an active area of research globally.

However, IoT applications also introduce challenges that need to be addressed for a meaningful realisation of the promised benefits. Sensors embedded in home appliances, TVs, laptops, clothes, curtains etc. would have access to a plethora of confidential information. With the capability to sense the environment, process data and exchange information, smart devices may endanger the privacy of individuals. Although concerns about the security aspects of IoT are well known [1], the dimension of ethics remains largely unexplored.

Usually, the design of a machine, smart device or software considers only its functional aspects, while no attention is paid to the moral, ethical or social requirements that may be necessary for its meaningful application. Such a stance toward machine functioning may precipitate situations that endanger core human values, including physical or mental harm and threats to life. On several occasions, technology has already breached people's ethical boundaries, for instance in the perpetuation of crimes against women, pricing bias in online markets, the usurping of jobs by automation, the online exploitation of children, and the ethical concerns surrounding smart healthcare, autonomous cars and killer robots [2]. As modern technologies increasingly dovetail into our lives, such concerns become apparent [3], [4].

Because IoT utilises an array of smart things that sense information of interest and use Artificial Intelligence (AI) to provide a variety of application services, the decisions involved in IoT may have ethical connotations [5]. For instance, smart devices could sense their surroundings and exchange data with other devices or people in ways that may be ethically unacceptable. Although AI is hailed as a prominent enabling technology for the IoT, concerns about its ethical impact also emerge [5]. With the increased use of AI algorithms for cognitive tasks, such systems require an ethical dimension [6]. Owing to such apprehensions, Rosalind Picard, director of the Affective Computing Group at MIT, remarked, ‘The greater the freedom of a machine, the more it will need moral standards’ [7]. Although there is a tendency to assume that machines embedded with AI will promote equality and justice, the absence of a framework for ethics may endanger fundamental human rights [8]. Thus, considering ethics within the design of smart technologies is critical to safeguard human interests.

Generally, application providers tend to be overzealous in providing smart services to people to secure economic benefit, and in the process they downplay concerns of ethical compliance [9], [10]. Over thirty years of research into the societal implications of technology suggests a strong relationship between the two that cannot be overlooked [11]. People should be assured that they have control over the behaviour of smart devices; otherwise, social disarray could follow, which in turn would adversely affect the growth of IoT. As smart devices increasingly integrate with human society, there arises a need for a mechanism to facilitate smooth interaction between devices and people.

However, incorporating ethics within machines is a challenging task for several reasons. Firstly, the field is marred by differences of opinion among philosophers, ethicists and religious thinkers. Secondly, ethical concepts are not expressed in well-defined mathematical forms that facilitate computation [12]. Thirdly, the subject is interdisciplinary, spanning such diverse areas as philosophy, psychology, neuroscience and artificial intelligence [13]. A detailed discussion of the challenges encountered in the design of machines endowed with morality can be found in [12]-[14]. Notwithstanding the difficulty, it is necessary to address the question of the ethical conformity of smart devices to ensure widespread adoption of the IoT paradigm.

To address this challenge, we propose a method based on Boolean algebra to implement ethics within the smart devices of the IoT. We adopt the ontology agnostic approach towards ethics implementation in smart devices described in [15]. The ontology agnostic approach refers to a design methodology that seeks to develop a mechanism to implement a given set of ethics in smart devices, regardless of the beliefs, philosophy, culture etc. that motivate them. Through such incorporation, ordinary systems are transformed into ethics-aware systems, i.e. systems that are aware of their context-specific ethical responsibilities and capable of exhibiting them. The collection of moral, ethical, legal, religious, cultural or regional parameters applicable to a machine's functioning context is referred to as the Ethics of Operation (EOP). The underlying conceptual framework used to facilitate the implementation of ethics in machines is outlined in [2].

The proposed method implements the ethics applicable to a machine context using five ethics categories, viz. Forbidden, Disliked, Permissible, Recommended and Obligatory (FDPRO). In addition, the machine uses several ethics modes to decide whether a given scenario is ethically acceptable. The method works as follows. Ethical requirements applicable in a given machine context are expressed as propositional statements that may relate to input or output. Using these propositions, the various scenarios that may arise between a machine and its context are identified, and the ethical desirability of each is specified using the five ethics categories. Finally, ethics modes are used to decide the ethical conformity of a given scenario.

To the best of our knowledge, this work is the first effort to consider the implementation of the five ethics categories (FDPRO) from an engineering perspective. It uses the novel concept of ethics modes to enable a machine to exhibit varying ethical behaviour based on context. Moreover, the authors present a set of simulation results to validate the effectiveness of the proposed method. This work offers a viable approach to implement ethics in a flexible, scalable and convenient manner and to realise the concept of an ethics layer for smart devices suggested in [15]. The method of implementing ethics outlined in this paper has numerous potential applications, e.g. ethical gadgets, ethical toys, ethical cars, ethical homes etc. It offers a way to design socially responsible smart devices that go beyond functional requirements and imbue ethics as well.

II. RELATED WORK

The question of ethical design within AI systems has become one of the leading research areas in the past few years [16]. In a recent communiqué, world-renowned Internet pioneer and co-inventor of TCP/IP Vinton Cerf articulated the need to design models for the ethical use of IoT [17]. In 2016, Stanford University launched AI100, a hundred-year research program to explore the design of ethics for AI [18]. However, the project does not yet offer solutions; rather, it seeks to kindle discussion on the topic among ethicists, scientists, policy makers, industry leaders and the general public [19]. IEEE also launched a similar initiative in 2017, called Ethically Aligned Design (EAD), which focuses on ethical design for AI and autonomous systems [20].

A recent work that seeks to design ethics for the smart devices of IoT is the policy-based approach of Baldini [21], but the authors view ethics in a very restricted sense, limited to privacy profiles offered to citizens against payment. The authors of [15] propose the Ethics Aware Object Oriented Smart City Architecture (EOSCA), the first smart city architecture to address the question of the ethical compliance of smart devices. EOSCA offers a conceptual framework that dedicates a separate layer, called the ethics layer, in the protocol stack to account for the ethical behaviour of smart devices. The authors suggest a tabular approach for implementing the EOP within devices. However, the method can only represent a static ethical response limited to forbidden, permissible and obligatory scenarios. Also, ethical requirements are specified within tables in a tedious manner that does not scale.

Traditionally, the field of study that explores the possibility of imbuing machines with ethics is known as roboethics, also called machine ethics, machine morality or friendly AI [14]. Its aim is to design robots capable of full-fledged moral reasoning, referred to as Artificial Moral Agents (AMAs). Researchers have suggested several approaches that may be used to realise machine morality. One of the earliest attempts to implement ethics in machines is a program called JEREMY, which uses a utilitarian approach to measure the utility of an action in terms of its intensity, duration and probability [22]. The program asks the user to enter a description of each action along with an estimate of its pleasure and likelihood. This information is used to calculate the net pleasure of each action, and the program outputs the action(s) that achieve the maximum net pleasure.

Similarly, Anderson et al. developed a biomedical ethics advisor called MedEthEx [23]. It uses three prima facie duties, namely beneficence, nonmaleficence and respect for autonomy, to analyse the ethical dilemma of a patient refusing medication. Values are associated with these duties to enable a machine to decide the ethical preference among the various actions that could be taken by a medical professional. The approach, however, is confined to a very specific use case and may not be easily extended to implement ethics in other domains.

A logic-based approach to designing ethical robots is proposed in [24]. A system based on deontic logic is developed to infer negative or positive outcomes, denoted by negative and positive signs, respectively. An increase in negative or positive outcomes, denoted by an exclamation mark, is also measured. Among the set of possible actions, the robot searches for a proof to ascertain whether an action is permissible. However, the authors acknowledge that the approach lacks clarity and generalisation.

McLaren employs casuistry to design two models for ethical reasoning, Truth-Teller and SIROCCO [25]. Truth-Teller compares a given problem with well-known similar cases and reasons about the extent to which a similar conclusion may apply. SIROCCO seeks to provide information relevant to a given case to assist a human user in ethical decision making. A mapping algorithm retrieves codes and cases relevant to a problem and ranks them according to a similarity index.

The authors of [26] propose a neuroscience-inspired model of human cognition called LIDA. The work attempts to model the Global Workspace Theory (GWT) of consciousness, a highly regarded model of human cognition. Nodes with positive or negative values are used to indicate the emotions or feelings involved in a decision. A LIDA agent updates the representation of its environment by sensing and then chooses the most important and urgent task. A learning mechanism selects the appropriate behaviour among possible action schemes based on affective and rational parameters. However, the authors admit that the model suffers from the problem of scalability and raises a host of additional questions.

Cervantes et al. seek to design a model that imitates the human decision-making process [27]. The model has architectural components corresponding to different regions of the human brain, e.g. amygdala, cortex, hippocampus etc. The agent evaluates all possible options using a set of moral and ethical rules, weighted according to the extent to which they can be broken. Variables are also used to represent the emotions, desires and experiences of an agent. Based on the weights of the contributing parameters, an aggregate value is calculated that indicates the best option available to the agent.

Notwithstanding such endeavours, the field is largely nascent and theoretical in nature [28]. Moreover, the roboethics vision of designing agents capable of full-blown moral decision making may not be necessary for the smart things of the IoT world. It is more plausible to focus only on the subset of ethical parameters relevant to a device context to ensure ethically compliant behaviour than to identify the principles of a universal moral theory [15].

III. PROPOSED BOOLEAN METHOD

We identify propositional variables, related to the input or output of the system, that sufficiently capture the EOP of the device. These propositions are used to identify the various scenarios that could occur in the interaction between a device and its surroundings. To indicate the ethical desirability of these scenarios, we define five ethics categories:

Forbidden: These scenarios are incompatible with the EOP and as such are prohibited for the machine.

Disliked: This category represents scenarios that are considered undesirable according to the EOP, though not to the extent of being forbidden. Such scenarios may or may not be allowed depending on the context (ethics mode).

Permissible: This category includes scenarios that are neither undesired nor preferred as per the EOP. As in the disliked case, these scenarios may or may not be allowed depending on the application context.

Recommended: Scenarios belonging to this category represent the preferred ethical behaviour of a machine. However, these scenarios are not necessary and may or may not be permitted.

Obligatory: This category includes scenarios that are essential ethical requirements that a machine must fulfil.

The ethics categories are inspired by Islamic jurisprudence, called ‘fiqh’ in Arabic, which classifies actions into five groups based on their ethical status: ‘haram’ (forbidden), ‘makruh’ (disliked), ‘mubah’ (permissible), ‘mustahab’ (recommended) and ‘fard’ (obligatory) [29]. The ethical desirability of the categories increases from forbidden to obligatory in the order FDPRO (forbidden, disliked, permissible, recommended, obligatory). However, such a classification of ethics has not previously been considered from an engineering implementation perspective.

Each scenario that may occur between a machine and its context belongs to exactly one of these ethics categories. Using these categories gives flexibility in representing the ethical requirements of smart devices: the ethical status of a scenario can be expressed with one of five ethics categories rather than with a simple binary decision between forbidden and obligatory. While forbidden scenarios are always denied and obligatory ones always allowed, scenarios belonging to the other three categories are allowed or denied based on the ethical expectations of the device context (ethics mode). Such a mechanism enables a machine to exhibit varying ethical behaviour depending on context.

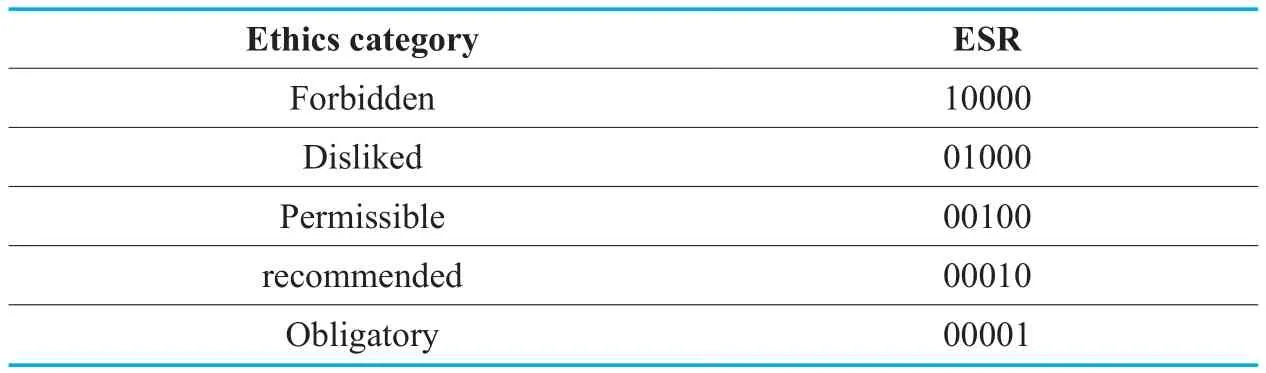

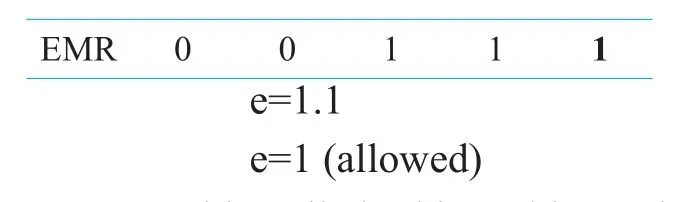

We use a register called the Ethics Status Register (ESR) to indicate the ethics category of a given scenario. It is a 5-bit register, each bit representing one of the ethics categories in the order FDPRO (forbidden, disliked, permissible, recommended, obligatory). The ethics category of a given scenario is represented by setting the corresponding bit in the ESR, while all other bits are 0 (unset). For example, a scenario belonging to the recommended category is indicated by the ESR value 00010. ESR values for the various ethics categories are shown in Table 1.
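As a minimal sketch, assuming the ESR is held in an ordinary integer with the forbidden bit as the most significant of the five bits, the one-hot encoding described above can be generated as follows. The helper names `esr_for` and `set_bit_position` are illustrative and are not part of the original method.

```python
# Sketch (assumption): a 5-bit ESR stored in a Python int, FDPRO order from
# the most significant bit (forbidden) to the least significant (obligatory).
CATEGORIES = ["forbidden", "disliked", "permissible", "recommended", "obligatory"]


def esr_for(category: str) -> int:
    """Return the one-hot ESR value for an ethics category."""
    i = CATEGORIES.index(category) + 1  # 1-based position in FDPRO order
    return 1 << (5 - i)                 # set only bit i, counting from the left


def set_bit_position(esr: int) -> int:
    """Return i, the 1-based position (from the left) of the single set bit."""
    if esr <= 0 or esr >= 1 << 5 or esr & (esr - 1):
        raise ValueError("ESR must be a 5-bit value with exactly one bit set")
    return 6 - esr.bit_length()


for name in CATEGORIES:
    print(f"{name:<12}{esr_for(name):05b}")
```

For instance, `esr_for("recommended")` yields 0b00010, the value quoted in the text, and `set_bit_position` recovers the index i used when the ethics bit is evaluated.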

To decide whether to allow or deny a given scenario belonging to any of the ethics categories, we define four ethics modes. We assume that forbidden scenarios are always denied and obligatory scenarios always permitted. Ethics modes enable a smart device to exhibit varying ethical characteristics depending on which of the four modes is enabled. The applicable ethics mode is determined by the ethical expectations of the application context. The four ethics modes are defined below:

Table I. ESR values for the various ethics categories.

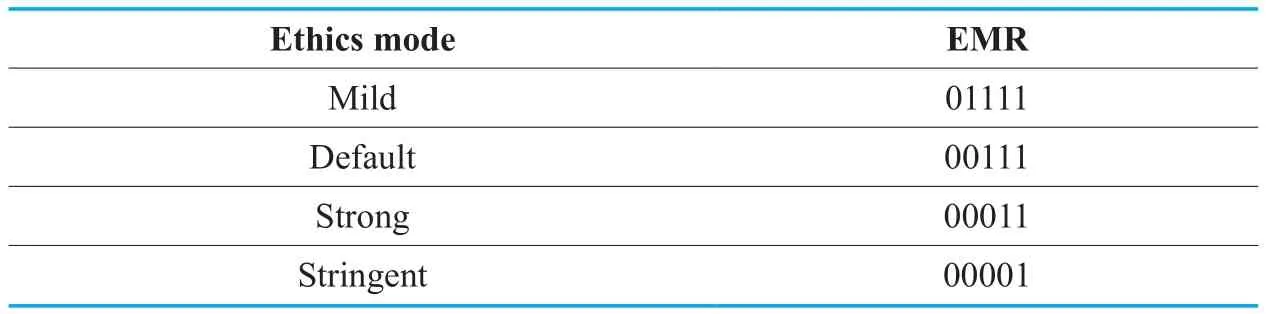

Table II. EMR values of the ethics modes.

Mild: Mild mode allows all scenarios except the forbidden ones and may be suitable for applications with very lenient ethical considerations, e.g. environment sensing.

Default: Default mode allows scenarios in the permissible, recommended and obligatory categories but denies those in the forbidden and disliked categories. This mode may be appropriate for the majority of modern services, e.g. various IoT applications.

Strong: Strong mode denies scenarios in the forbidden, disliked and permissible categories but permits recommended and obligatory ones. Strong mode may be applicable to areas such as household robots, intelligent transportation systems etc.

Stringent: Stringent mode only allows scenarios that are necessary. Hence, only obligatory scenarios are allowed; all others are denied. This mode may be appropriate for life-critical situations like smart weapons, robotic surgery etc.

To specify the ethics mode applicable to a given machine, we use another register called the Ethics Mode Register (EMR). Like the ESR, it is a 5-bit register in which each bit corresponds to one ethics category in the order FDPRO. A given mode is indicated by unsetting the bits of the categories it denies and setting the bits of those it allows. For example, default mode is represented by the EMR value 00111, because it denies scenarios in the forbidden and disliked categories and allows scenarios in the permissible, recommended and obligatory categories. EMR values for the various ethics modes are indicated in Table 2.
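The four EMR values follow directly from the mode definitions above. The Python sketch below (the names `EMR` and `allowed_categories` are illustrative) decodes each value back into the categories it allows and checks the stated assumption that every mode denies forbidden scenarios and allows obligatory ones.

```python
# EMR value of each ethics mode as described in the text (FDPRO order, MSB first).
CATEGORIES = ["forbidden", "disliked", "permissible", "recommended", "obligatory"]
EMR = {
    "mild": 0b01111,       # denies forbidden only
    "default": 0b00111,    # denies forbidden and disliked
    "strong": 0b00011,     # allows recommended and obligatory
    "stringent": 0b00001,  # allows obligatory only
}


def allowed_categories(emr: int) -> list:
    """Return the categories whose EMR bit is set, i.e. those the mode allows."""
    return [c for i, c in enumerate(CATEGORIES, start=1) if (emr >> (5 - i)) & 1]


# Every mode denies forbidden (bit 1 clear) and allows obligatory (bit 5 set).
assert all((v & 0b10000) == 0 and (v & 0b00001) == 1 for v in EMR.values())

for mode, emr in EMR.items():
    print(f"{mode:<10}{emr:05b}  allows: {', '.join(allowed_categories(emr))}")
```

Note that the set bits of each mode are a subset of those of the next more lenient mode, so the four modes form a nested sequence from stringent to mild.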

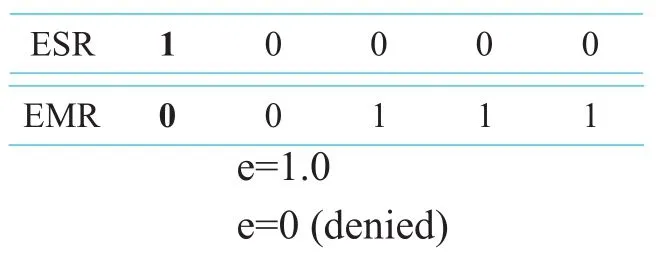

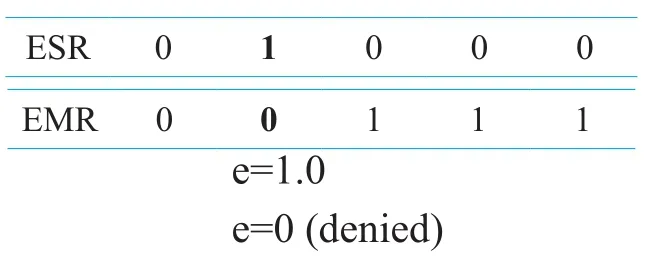

Whether a given scenario belonging to any one of the five ethics categories is allowed or denied by the enabled ethics mode is specified by a bit called the ethics bit. A value of 1 for the ethics bit means the scenario is allowed, whereas a value of 0 indicates the scenario is denied. The flowchart of the proposed Boolean method for implementing ethics is shown in figure 1.

3.1 Working of the method

First, we identify propositional variables that sufficiently represent the EOP of a machine. Using these propositions, the various scenarios that may occur in the interaction between a smart device and its surroundings are identified. These could include normal device functioning, system malfunction, ethical conformity or ethical breach. The ethics category of each scenario is indicated by its ESR, and the EMR is set to enable the ethics mode most appropriate for the application. For a given scenario to be considered ethically consistent, its ethics category must be allowed by the enabled ethics mode. In other words, the ESR and EMR bits corresponding to the ethics category of the scenario must both be set.

For each scenario of machine functioning, we evaluate the ethics bit by performing a logical AND of the ESR bit denoting the ethics category of the scenario (the bit that is set in FDPRO order) with the corresponding ethics category bit of the EMR (the bit at the same position in the EMR):

$$e = \mathrm{ESR}_i \wedge \mathrm{EMR}_i$$

where

e = ethics bit

ESR = Ethics Status Register

EMR = Ethics Mode Register

i = position of the set bit in the scenario's ESR (ranging from 1 to 5)
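The equation above can be sketched in Python as follows, assuming the same integer encoding of the registers (FDPRO from the most significant bit). The function name `ethics_bit` is illustrative; the code extracts bit i of both registers and ANDs them, mirroring $e = \mathrm{ESR}_i \wedge \mathrm{EMR}_i$ term by term.

```python
def ethics_bit(esr: int, emr: int) -> int:
    """Compute e = ESR_i AND EMR_i, where i is the position of the set bit in ESR."""
    if esr <= 0 or esr >= 1 << 5 or esr & (esr - 1):
        raise ValueError("ESR must be a 5-bit value with exactly one bit set")
    i = 6 - esr.bit_length()        # 1-based position from the left (F = 1, ..., O = 5)
    esr_i = (esr >> (5 - i)) & 1    # 1 by construction, since bit i is the set bit
    emr_i = (emr >> (5 - i)) & 1    # whether the enabled mode allows category i
    return esr_i & emr_i


# Recommended scenario (00010) under default mode (00111): 1 AND 1 = 1, allowed.
print(ethics_bit(0b00010, 0b00111))
# Disliked scenario (01000) under default mode: 1 AND 0 = 0, denied.
print(ethics_bit(0b01000, 0b00111))
```

Because the ESR has exactly one bit set, the same result is obtained by testing whether `esr & emr` is non-zero; the explicit indexing is kept here only to mirror the equation.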

If the ethics bit for a scenario evaluates to 1, the scenario is deemed ethically consistent and allowed. If the ethics bit is 0, the scenario is treated as ethically inappropriate and denied. It may not be necessary, however, to calculate the ethics bit for every scenario: a default value of the ethics bit, set or unset, could be used to reflect a machine behaviour that allows or denies unspecified scenarios by default, respectively. The algorithm for the method is as follows:

Algorithm

1. Represent the EOP in terms of propositional variables

2. Identify scenarios and indicate their ethics category (ESR)

3. Set the appropriate ethics mode (EMR)

4. Evaluate the ethics bit for each scenario

5. Allow or deny each scenario based on the value of its ethics bit
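The five steps above can be sketched end to end in Python. This is an illustration under stated assumptions, not the authors' MATLAB implementation: scenarios are tuples of 0/1 proposition values, the mapping from scenario to ESR is a plain dictionary, and `allow`, `scenario_esr` and `default_bit` are hypothetical names. Scenarios without an assigned category fall back to the default ethics bit discussed above.

```python
from typing import Dict, Optional, Tuple

Scenario = Tuple[int, ...]  # one 0/1 value per propositional variable (step 1)


def ethics_bit(esr: int, emr: int) -> int:
    # With a one-hot ESR, ESR_i AND EMR_i equals 1 exactly when ESR & EMR != 0.
    return 1 if esr & emr else 0


def allow(scenario: Scenario,
          scenario_esr: Dict[Scenario, int],  # step 2: scenario -> ESR
          emr: int,                           # step 3: enabled ethics mode
          default_bit: int = 0) -> bool:
    """Steps 4-5: evaluate the ethics bit and return True (allow) or False (deny).

    Scenarios without an assigned category fall back to default_bit
    (0 = deny by default, 1 = allow by default).
    """
    esr: Optional[int] = scenario_esr.get(scenario)
    e = default_bit if esr is None else ethics_bit(esr, emr)
    return e == 1


# Hypothetical two-proposition device with only two categorised scenarios.
table = {(1, 1): 0b00001, (1, 0): 0b10000}
print(allow((1, 1), table, emr=0b00111))                 # obligatory -> True
print(allow((1, 0), table, emr=0b01111))                 # forbidden -> False
print(allow((0, 0), table, emr=0b00111))                 # unlisted, deny by default -> False
print(allow((0, 0), table, emr=0b00111, default_bit=1))  # unlisted, allow by default -> True
```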

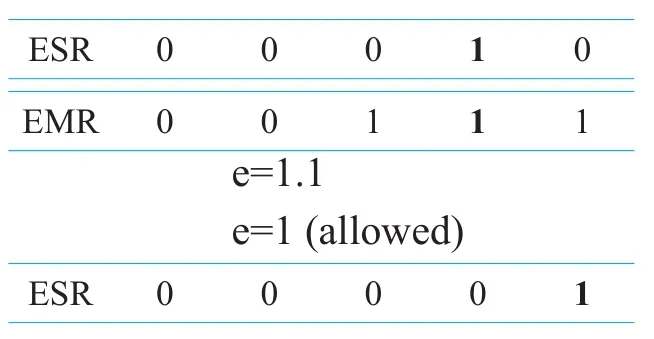

Let us consider the evaluation of the ethics bit (e) for the five possible ethics categories using the default ethics mode (EMR = 00111). In each case, the ESR and EMR bits combined by the logical AND operation are those at position i, the position of the set bit in the scenario's ESR.

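3.1.1 Forbidden category

ESR = 10000, EMR = 00111, i = 1: $e = \mathrm{ESR}_1 \wedge \mathrm{EMR}_1 = 1 \wedge 0 = 0$, so the scenario is denied.

3.1.2 Disliked category

ESR = 01000, EMR = 00111, i = 2: $e = \mathrm{ESR}_2 \wedge \mathrm{EMR}_2 = 1 \wedge 0 = 0$, so the scenario is denied.

3.1.3 Permissible category

ESR = 00100, EMR = 00111, i = 3: $e = \mathrm{ESR}_3 \wedge \mathrm{EMR}_3 = 1 \wedge 1 = 1$, so the scenario is allowed.

3.1.4 Recommended category

ESR = 00010, EMR = 00111, i = 4: $e = \mathrm{ESR}_4 \wedge \mathrm{EMR}_4 = 1 \wedge 1 = 1$, so the scenario is allowed.

3.1.5 Obligatory category

ESR = 00001, EMR = 00111, i = 5: $e = \mathrm{ESR}_5 \wedge \mathrm{EMR}_5 = 1 \wedge 1 = 1$, so the scenario is allowed.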

We use a table, called the ethics table, to show the value of the ethics bit e for scenarios belonging to the various ethics categories under the different ethics modes, as shown in Table 3.
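Because every entry of the ethics table follows from $e = \mathrm{ESR}_i \wedge \mathrm{EMR}_i$, the table can be regenerated mechanically. The sketch below is our reconstruction from the definitions (the names `MODES` and `ethics_table` are illustrative); if Table 3 follows those definitions, the two should agree.

```python
# Sketch: rebuild the ethics table from the definitions, e = ESR_i AND EMR_i.
CATEGORIES = ["forbidden", "disliked", "permissible", "recommended", "obligatory"]
MODES = {"mild": 0b01111, "default": 0b00111, "strong": 0b00011, "stringent": 0b00001}


def ethics_table() -> dict:
    """Map each category to {mode: ethics bit}."""
    table = {}
    for i, category in enumerate(CATEGORIES, start=1):
        esr = 1 << (5 - i)  # one-hot ESR for this category
        table[category] = {mode: 1 if esr & emr else 0 for mode, emr in MODES.items()}
    return table


print(f"{'':<12}" + "".join(f"{m:>10}" for m in MODES))
for category, row in ethics_table().items():
    print(f"{category:<12}" + "".join(f"{row[m]:>10}" for m in MODES))
```

The printed rows, read from forbidden to obligatory, are 0 0 0 0, 1 0 0 0, 1 1 0 0, 1 1 1 0 and 1 1 1 1: a triangular pattern reflecting that each stricter mode denies one more category than the previous one.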

IV. IMPLEMENTATION OF THE PROPOSED METHOD

To illustrate the working of the proposed Boolean method, which incorporates several ethics categories and ethics modes, we consider the EOP of a healthcare device that measures the health condition of a patient and sends health status messages to close relatives and friends. Instead of ascertaining whether a recipient is authorised to receive the message, we assume authorisation but classify recipients into relative(s) and friend(s). The device EOP is as follows:

Table III. Ethics table.

Table IV. Context table for the healthcare device.

It is obligatory for the healthcare device to inform the relative(s) in case of emergency, while informing the friend(s) is recommended. The device may also share health status messages with relative(s) and friend(s) even if there is no emergency, though it is recommended not to disturb them unnecessarily. Although the device is not legally bound to send a message to friends in case of emergency, failing to do so is disliked. Finally, it is forbidden for the healthcare device not to inform the relative(s) in the event of an emergency.

The above ethical requirements for the healthcare device are modelled in MATLAB using three Boolean variables e, r and m, which represent:

e: health emergency occurred

r: recipient is relative of patient

m: send health status message

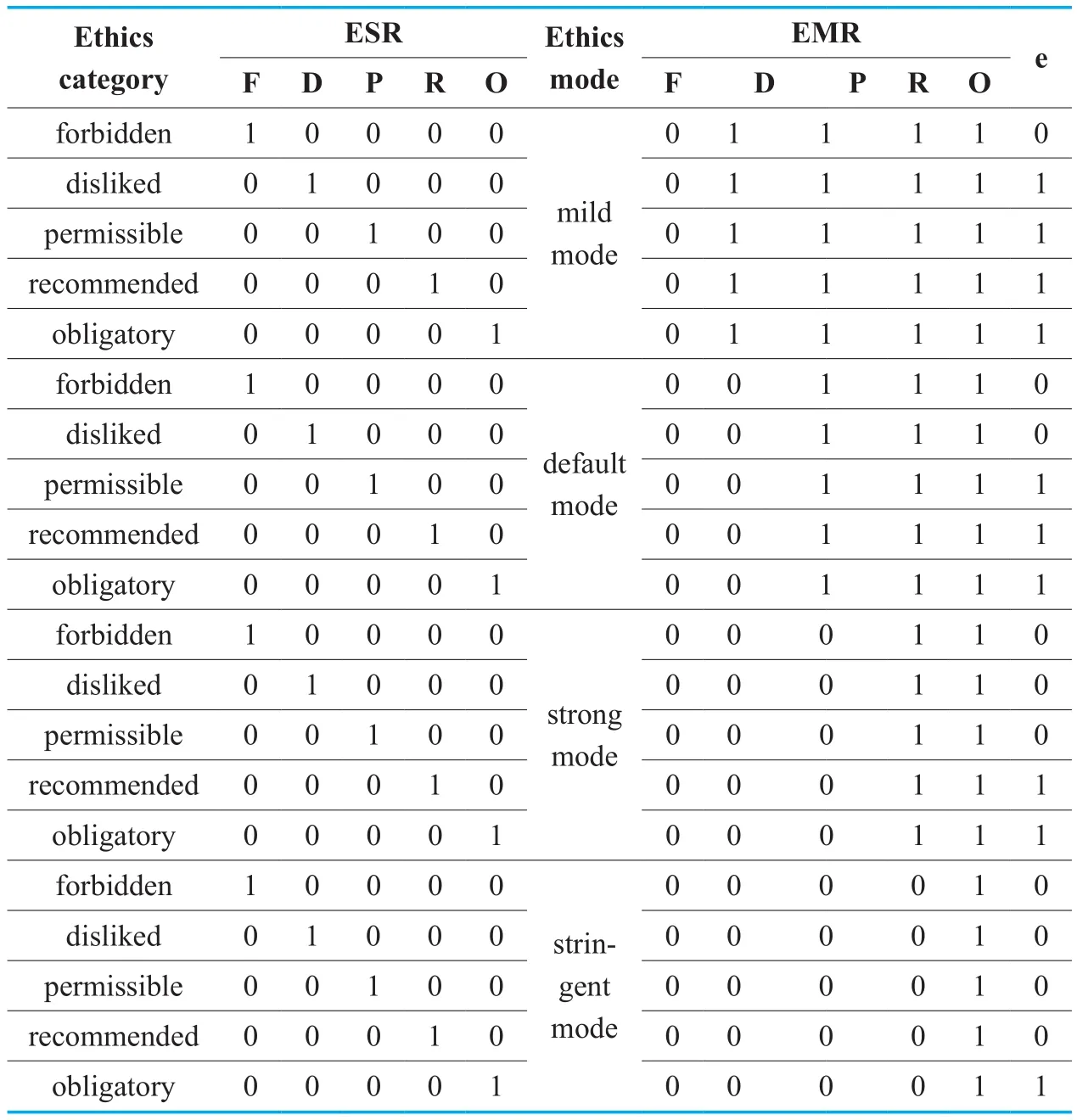

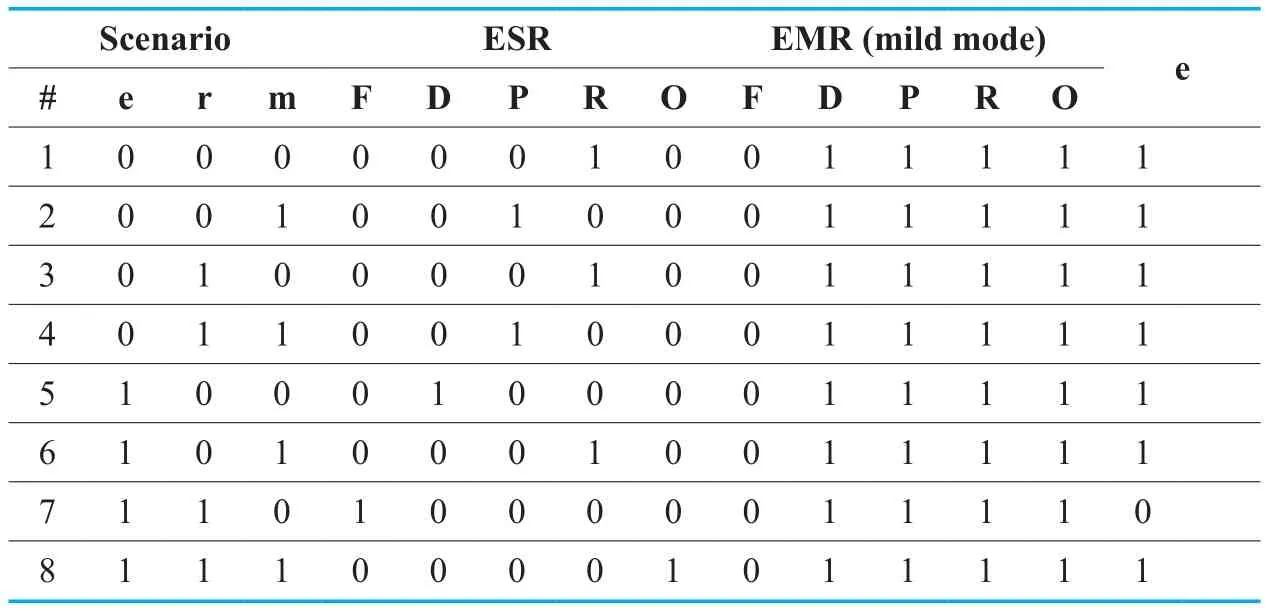

Using the above three variables, we represent the different possible scenarios that may occur during the functioning of the smart device in a context table, as indicated in Table 4. The table uses 1 and 0 to represent true and false values of the propositional variables. The propositional variable r qualifies the relationship, i.e. r = 1 represents a relative while r = 0 denotes a friend. If the healthcare device does not detect any emergency situation, a recent health update about the patient is sent. The meaning implied by the different combinations of the variables, and their ethical status as per the device EOP, are also indicated in the table. The simulation uses a matrix called the Ethics Matrix (EM) to keep track of the ethical status of each scenario.
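Table IV itself is not reproduced in this text, so the mapping below is our reading of the EOP stated earlier in this section and should be treated as an assumption; the published table is authoritative. Its category counts (one forbidden, one disliked, two permissible, three recommended and one obligatory scenario) agree with those reported in Section 5.1.

```python
from collections import Counter

# Our reading of the device EOP (assumption). Keys are (e, r, m):
#   e = health emergency occurred, r = recipient is a relative (0 = friend),
#   m = health status message is sent.
CONTEXT_TABLE = {
    (1, 1, 1): "obligatory",   # relative informed in an emergency
    (1, 1, 0): "forbidden",    # relative not informed in an emergency
    (1, 0, 1): "recommended",  # friend informed in an emergency
    (1, 0, 0): "disliked",     # friend not informed in an emergency
    (0, 1, 1): "permissible",  # routine update sent to a relative
    (0, 0, 1): "permissible",  # routine update sent to a friend
    (0, 1, 0): "recommended",  # relative not disturbed without an emergency
    (0, 0, 0): "recommended",  # friend not disturbed without an emergency
}

assert len(CONTEXT_TABLE) == 2 ** 3  # every combination of e, r and m is covered
print(Counter(CONTEXT_TABLE.values()))
```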

The simulation uses a 5-bit Boolean variable ESR to represent the ethics category of each scenario, and the applicable ethics mode is indicated by another 5-bit variable, EMR. Whether a particular scenario is deemed ethically consistent by an ethics mode is decided by the value of the ethics bit, which is calculated by performing a logical AND of the set bit in the ESR with the bit at the corresponding position in the EMR.

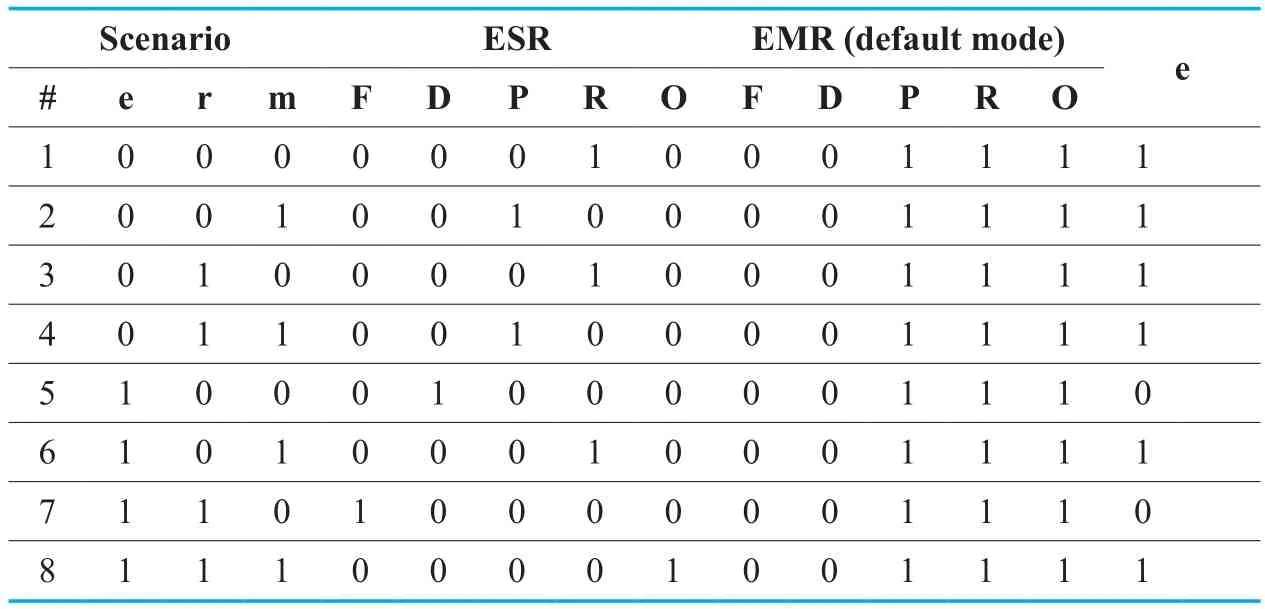

The ethics tables for the smart healthcare device, indicating the various scenarios, the values of ESR and EMR, and the corresponding ethics bit for each of the four ethics modes, are given in Tables 5-8. The ethics bit is indicated by ‘e’ in the ethics tables.

Although the ethical status of all 8 scenarios of the healthcare device is indicated explicitly, this is not necessary. Depending on the type of application, unspecified scenarios may be denied or allowed by default by setting the default ethics to deny or allow, respectively. The simulation uses random binary streams of length 100 for the three variables to model the various scenarios that may occur in the machine context over a period of time.
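A minimal Python sketch of this simulation step is shown below. It assumes independent, uniformly random bits for e, r and m and reuses our reading of the context table (an assumption, as noted earlier); the seed and the function name `simulate` are hypothetical and are fixed only for reproducibility, so the resulting counts will not match the authors' MATLAB runs. Under this assumption each of the 8 scenarios has probability 1/8, so over 100 runs one expects about 37.5 recommended, 25 permissible and 12.5 runs in each remaining category.

```python
import random
from collections import Counter

# Category of each (e, r, m) scenario, per our reading of the EOP (assumption).
CATEGORY = {
    (1, 1, 1): "obligatory", (1, 1, 0): "forbidden",
    (1, 0, 1): "recommended", (1, 0, 0): "disliked",
    (0, 1, 1): "permissible", (0, 0, 1): "permissible",
    (0, 1, 0): "recommended", (0, 0, 0): "recommended",
}


def simulate(runs: int = 100, seed: int = 1) -> list:
    """Draw random binary streams for e, r and m and return the ethics
    category of the scenario triggered on each run."""
    rng = random.Random(seed)  # fixed seed only for reproducibility
    e = [rng.randint(0, 1) for _ in range(runs)]
    r = [rng.randint(0, 1) for _ in range(runs)]
    m = [rng.randint(0, 1) for _ in range(runs)]
    return [CATEGORY[s] for s in zip(e, r, m)]


categories = simulate()
print(Counter(categories))  # per-category run counts (cf. figure 2)
```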

V. ANALYSIS OF RESULTS

We consider the following results to analyse the proposed method of implementing ethics in the context of the healthcare device:

5.1 Ethics categories

A simulation run randomly chooses one of the 8 ($2^3$) possible scenarios in the context table of the smart healthcare device. The number of simulation runs corresponding to each ethics category during the 100 runs is shown in figure 2. Since only one scenario in the context table belongs to each of the forbidden, disliked and obligatory categories, a similar number of runs occurs for these three categories. The permissible category has two scenarios, which explains the higher count of corresponding runs in the bar graph. Since three scenarios correspond to the recommended category, it has the highest number of runs.

5.2 Ethics profile

The ethics profile indicates the percentage of simulation runs belonging to each ethics category. The relative percentages of the different ethics categories depend on the number of scenarios belonging to each category in the context table of the healthcare device, as explained previously. Whether a given simulation run is ethically feasible is decided by the applicable ethics mode. For each ethics mode, namely mild, default, strong and stringent, the ethics categories regarded as allowable are shown offset in the respective pie chart.

Table V. Ethics table for the healthcare device (mild mode).

Table VI. Ethics table for the healthcare device (default mode).

Table VII. Ethics table for the healthcare device (strong mode).

Since mild mode represents the most lenient form of ethical requirement, in which a device is allowed to perform all activities except the forbidden ones, the slices corresponding to disliked, permissible, recommended and obligatory are offset in figure 3(a), which shows the ethics profile for mild mode. Default mode narrows the set of allowable categories by also denying the disliked category. Hence, the pie chart for default mode in figure 3(b) shows only the permissible, recommended and obligatory categories offset from the pie. Similarly, the ethics profile for strong mode in figure 3(c) has the slices corresponding to the recommended and obligatory categories offset. Stringent mode represents a strict form of ethical requirement that allows only the obligatory category and denies all others. This is shown in figure 3(d), where only the slice corresponding to the obligatory category is offset from the pie.

Table VIII. Ethics table for the healthcare device (stringent mode).

5.3 Ethics pulse

The various scenarios that occur in the functioning of the smart healthcare device are classified into five ethics categories, viz. forbidden, disliked, permissible, recommended and obligatory. Ethics modes are used to decide whether a particular scenario is ethically acceptable. All four ethics modes, i.e. mild, default, strong and stringent, allow obligatory scenarios and deny forbidden ones. Scenarios belonging to the other categories may be allowed or denied depending on the enabled ethics mode. The binary decision taken by a machine regarding the ethical feasibility of a given scenario is signified by the ethics pulse. A high ethics pulse indicates that the scenario is allowed, whereas a low ethics pulse means that the scenario is denied.

The ethics pulses for the 100 simulation runs under each of the four ethics modes are represented as an ethics pulse train.

Mild mode has the highest number of positive pulses, as seen in figure 4(a). This is expected, since mild mode allows all scenarios except the forbidden ones. The number of positive pulses decreases progressively from mild mode to stringent mode, with stringent mode having the fewest. Since default mode allows permissible scenarios but strong mode regards them as ethically inconsistent, the number of positive pulses in default mode, Fig. 4(b), is higher than in strong mode, Fig. 4(c). The sparse occurrence of positive pulses in the pulse train for stringent mode, shown in figure 4(d), is expected because this mode allows only obligatory scenarios.
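The ethics pulse train is simply the sequence of ethics bits over the runs. The sketch below computes it for each mode on a short, purely hypothetical sequence of run categories (`pulse_train` and `runs` are illustrative names, not from the original simulation).

```python
ESR = {"F": 0b10000, "D": 0b01000, "P": 0b00100, "R": 0b00010, "O": 0b00001}
MODES = {"mild": 0b01111, "default": 0b00111, "strong": 0b00011, "stringent": 0b00001}


def pulse_train(categories, emr):
    """Ethics pulse per run: 1 (high) if the mode allows the category, else 0 (low)."""
    return [1 if ESR[c] & emr else 0 for c in categories]


runs = ["R", "P", "F", "O", "D", "R", "P"]  # hypothetical sequence of run categories
for mode, emr in MODES.items():
    train = pulse_train(runs, emr)
    print(f"{mode:<10}{train}  positive pulses: {sum(train)}")
```

For this sequence the positive-pulse counts are 6, 5, 3 and 1 from mild to stringent. The decrease is not specific to this example: because each stricter mode's EMR bits are a subset of the more lenient mode's, the number of positive pulses can never increase from mild to stringent for any sequence of runs.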

5.4 Scenarios status

Based on the decision made by a machine on the ethical acceptability of a scenario, the scenario is either allowed or denied. For the 100 simulation runs, the number of scenarios (runs) allowed or denied by each ethics mode is shown in the bar graphs of figure 5. The number of allowed scenarios decreases from mild mode to stringent mode, and the number of denied scenarios increases correspondingly. Out of 100 scenarios, mild mode allows 90, as shown in figure 5(a). It is followed by default mode, which allows 77, as indicated in figure 5(b). Strong mode has an equal number of allowed and denied scenarios, i.e., 50 each, as observed in figure 5(c). Lastly, for stringent mode the number of allowed scenarios drops to 12, as shown in figure 5(d).
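The reported counts can be checked with simple arithmetic. Every run is either allowed or denied, and each successive mode denies exactly one more category than the previous one, so the differences between consecutive allowed counts give the number of runs that fell into each category. The sketch below derives these implied category counts from the figures quoted above; they are inferred from the reported totals, not separately reported values.

```python
TOTAL_RUNS = 100

# Allowed-scenario counts reported for the simulation (figure 5).
allowed = {"mild": 90, "default": 77, "strong": 50, "stringent": 12}

# Every run is either allowed or denied, so denied = total - allowed.
denied = {mode: TOTAL_RUNS - n for mode, n in allowed.items()}

# Each stricter mode denies exactly one more category than the previous one,
# so consecutive differences give the number of runs in each category.
implied_categories = {
    "forbidden": TOTAL_RUNS - allowed["mild"],
    "disliked": allowed["mild"] - allowed["default"],
    "permissible": allowed["default"] - allowed["strong"],
    "recommended": allowed["strong"] - allowed["stringent"],
    "obligatory": allowed["stringent"],
}

print("denied:", denied)
print("implied runs per category:", implied_categories)
```

For this simulation the implied breakdown is 10 forbidden, 13 disliked, 27 permissible, 38 recommended and 12 obligatory runs, which sums to 100; the denied counts are 10, 23, 50 and 88 for mild, default, strong and stringent mode respectively.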

5.5 Ethics staircase

The ethical desirability of a given simulation run can be inferred from the ethics category of the scenario it triggers. Forbidden scenarios are ethically least desirable, whereas obligatory ones are ethically most desirable. We classify ethical desirability into five levels, called ethics levels, corresponding to the five ethics categories. We use the term ethics staircase to represent the ethics level (ethical desirability) of the various scenarios that a machine comes across during its operation. Just as humans encounter situations of varying ethical desirability on a daily basis, the ethics staircase indicates the ethical desirability of the scenarios faced by a machine. For the given simulation, the ethics staircase of the healthcare device is shown in figure 6. Since the simulation triggers scenarios from the context table of the device at random, the ethics level of the 100 runs varies randomly between level 1 and level 5.
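A minimal sketch of an ethics staircase follows. It assumes that ethics level 1 corresponds to forbidden and level 5 to obligatory (the text fixes the ordering of desirability; the numbering direction is an assumption). The hypothetical helpers `ethics_staircase` and `render` map each run's category to its level and draw a text plot with one column per run; the 20 runs are synthetic.

```python
import random
from enum import IntEnum


class Category(IntEnum):
    # Assumed numbering: ethics level 1 = least desirable, 5 = most desirable.
    FORBIDDEN = 1
    DISLIKED = 2
    PERMISSIBLE = 3
    RECOMMENDED = 4
    OBLIGATORY = 5


def ethics_staircase(categories):
    """Ethics level (1-5) of each run, in run order."""
    return [int(c) for c in categories]


def render(levels):
    """Text plot: one column per run, filled up to that run's ethics level."""
    rows = []
    for level in range(5, 0, -1):
        rows.append(f"{level} " + "".join("#" if lv >= level else " " for lv in levels))
    return "\n".join(rows)


rng = random.Random(7)
runs = [rng.choice(list(Category)) for _ in range(20)]  # 20 synthetic runs
print(render(ethics_staircase(runs)))
```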

VI.DISCUSSION

The method proposed in this work relies on the pivotal concepts of ethics categories and ethics modes. The ethics category of a scenario, or the ethics mode applicable in a given social context, may be decided in consultation with thinkers, ethicists, jurists, domain experts, or the people using the technology. Although we have used only five ethics categories, more granular ethical requirements, e.g. strongly recommended and weakly recommended, may also be implemented by adding more bits to the ESR. In such a case, the number of ethics modes would increase correspondingly, imbuing a machine with a larger range of ethical behaviour. Moreover, the ethics mode for a given application need not be fixed and could be changed dynamically to better express the required ethical behaviour.
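How extra categories yield extra modes can be illustrated under one assumption: that modes stay nested, i.e. every mode denies the least desirable category, allows the most desirable one, and each stricter mode drops one more category. Under that assumption n ordered categories give n - 1 modes, which matches the four modes obtained for five categories. The function `ethics_modes` is hypothetical; the split of recommended into weakly and strongly recommended follows the example in the text.

```python
def ethics_modes(categories):
    """Build nested ethics modes for categories ordered least -> most desirable.

    Assumes every mode denies the first (forbidden) category and allows the
    last (obligatory) one; each successive mode drops one more category.
    Returns the allowed-category list of each mode, from most lenient to strictest.
    """
    return [categories[k:] for k in range(1, len(categories))]


five = ["forbidden", "disliked", "permissible", "recommended", "obligatory"]
six = ["forbidden", "disliked", "permissible",
       "weakly recommended", "strongly recommended", "obligatory"]

for scheme in (five, six):
    modes = ethics_modes(scheme)
    print(f"{len(scheme)} categories -> {len(modes)} modes")
    for allowed in modes:
        print("   allows:", ", ".join(allowed))
```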

The proposed method offers several advantages over the previous approach of implementing EOP [15]. Firstly, the previous method could only represent three ethics categories, viz. obligatory, permissible and forbidden, whereas the proposed Boolean method can also implement the recommended and disliked categories. Secondly, the previous method indicated the ethics category of scenarios implicitly in the values of the manners map, which was tedious. In contrast, the proposed method indicates the ethics category explicitly and conveniently in the ESR. Moreover, instead of using a manners table, it uses an ethics bit to decide whether a given scenario is ethically acceptable. Thirdly, the previous method could only implement a static ethical response, whereas the proposed Boolean method enables a machine to exhibit varying ethical behaviour by changing the ethics mode. The method of implementing ethics outlined in this paper promises numerous applications, e.g. ethical gadgets, ethical toys, ethical cars, ethical homes etc. It offers a way to design socially responsible technologies that go beyond functional requirements and also incorporate ethical considerations.

VII.CONCLUSION

The Internet of Things (IoT) represents a promising technology with far-reaching consequences for all aspects of modern society. The vast application domain of IoT has the potential to offer numerous benefits to people, such as smart healthcare, smart cars and smart homes. Nonetheless, the ever-increasing presence in our daily lives of AI-enabled smart things capable of making autonomous decisions, with possible repercussions for human beings, raises serious ethical concerns. Ignoring the potential ethical chaos that may result as machines are increasingly endowed with artificial intelligence could be dangerous.

In this work, we proposed a method based on Boolean algebra that is able to implement ethics relevant to a machine's context by using the concepts of ethics categories and ethics modes. The moral, ethical or legal considerations pertinent to the functioning of a smart device are collectively referred to as its Ethics of Operation (EOP). Propositional variables related to inputs or outputs that sufficiently capture the device EOP are identified. The ethical desirability (ethics category) of the various scenarios that could result during machine operation is indicated in the corresponding Ethics Status Register (ESR). Based on the application domain or the ethical expectations of a device's functioning, the appropriate ethics mode is enabled by specifying the value of the Ethics Mode Register (EMR). The ethics bit of a scenario is then evaluated from the values of the ESR and EMR to decide whether or not the scenario is ethically acceptable. The method offers a possible solution to facilitate the ethical design of numerous home and industry applications, e.g. ethical appliances, ethical robots, ethical cars, ethical smart cities etc. We hope our work will motivate researchers to work further toward implementing ethics in machines, fostering a technological society that respects core human values.
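To make the ESR/EMR evaluation concrete, here is a sketch with a hypothetical bit layout that is not taken from the paper: the ESR holds a one-hot code for the scenario's category, the EMR holds a mask of the categories the enabled mode allows, and the ethics bit is 1 when the two registers share a set bit. The paper's own Boolean expression for the ethics bit may be formulated differently.

```python
# Hypothetical bit layout (NOT taken from the paper): bit i stands for ethics
# category i, from forbidden (bit 0) to obligatory (bit 4).
FORBIDDEN, DISLIKED, PERMISSIBLE, RECOMMENDED, OBLIGATORY = (1 << i for i in range(5))

# Hypothetical EMR values: a mask of the categories each mode allows.
EMR = {
    "mild": DISLIKED | PERMISSIBLE | RECOMMENDED | OBLIGATORY,  # 0b11110
    "default": PERMISSIBLE | RECOMMENDED | OBLIGATORY,          # 0b11100
    "strong": RECOMMENDED | OBLIGATORY,                         # 0b11000
    "stringent": OBLIGATORY,                                    # 0b10000
}


def ethics_bit(esr: int, emr: int) -> int:
    """1 if the scenario's one-hot ESR category is enabled by the EMR mask, else 0."""
    return int((esr & emr) != 0)


# A permissible scenario is acceptable in default mode but not in strong mode.
print(ethics_bit(PERMISSIBLE, EMR["default"]), ethics_bit(PERMISSIBLE, EMR["strong"]))  # prints: 1 0
```

With this layout, switching the ethics mode amounts to writing a different mask into the EMR, while the ESR of each scenario stays unchanged.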
